Anura Core SDK for Android

Anura Core SDK for Android provides all the essential components for integrating NuraLogix Affective AI and DeepAffex into your Android application. It includes the DeepAffex Extraction Library, a DeepAffex Cloud API client, a camera control module, a face tracker, and other components that allow your application to take measurements using DeepAffex. This is the same SDK used to build Anura Lite by NuraLogix.

Anura Core SDK for Android has a pipeline architecture (Source, Pipe and Sink modules). A typical measurement pipeline is shown below:

Requirements

The latest version of the Anura Core SDK for Android is 2.4. It requires Android 7.1 (API Level 25) or higher. For a detailed list of requirements, please refer to the platform requirements chapter.

Getting Started

Accessing Anura Core SDK and Sample App

Please contact NuraLogix to get access to the private Git repository.

Setting up Maven access

Starting with Anura Core SDK 2.4.10, SDK dependencies are no longer bundled as .aar files in the libs folder. Instead, dependencies are now fetched from Maven.

To download dependencies from Maven, you must authenticate with an access token:

- Generate an Access Token:
  - Open your account menu (top-right) → Settings.
  - In the left menu, select Applications.
  - Click Select Permissions, then enable:
    - Repository → Read
    - Package → Read
  - Click Generate Token and save the token provided.
- Get Your Username:
  - Your Git username is listed under Settings → Profile in your git.deepaffex.ai Git account.
- Configure Environment Variables:
  - Set the following environment variables for authentication:

    export DFX_GIT_USERNAME="your-username"
    export DFX_GIT_TOKEN="your-access-token"

  - Refer to settings.gradle for details on how to set up Maven access and dependency resolution.
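If a missing variable only surfaces later as an opaque HTTP 401 during dependency resolution, a fail-fast check can help. The following is a minimal sketch, assuming you want a build script or CI step to stop early with a clear message; readMavenCredentials and MavenCredentials are hypothetical names, not part of the SDK or of the Sample App's settings.gradle:

```kotlin
// Hypothetical holder, not part of Anura Core SDK or the Sample App.
data class MavenCredentials(val username: String, val token: String)

// Hypothetical helper: reads DFX_GIT_USERNAME and DFX_GIT_TOKEN through `env`
// (System.getenv by default) and throws a descriptive error if either is
// missing or blank, instead of failing later during dependency resolution.
fun readMavenCredentials(env: (String) -> String? = System::getenv): MavenCredentials {
    fun requireVar(name: String): String =
        env(name)?.takeIf { it.isNotBlank() }
            ?: throw IllegalStateException("Environment variable $name is not set")
    return MavenCredentials(requireVar("DFX_GIT_USERNAME"), requireVar("DFX_GIT_TOKEN"))
}
```

Injecting `env` as a function keeps the check testable without touching real environment variables.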

Dependencies

Anura Core SDK for Android

You can set up project dependencies in your build.gradle as shown below:

dependencies {
    // Anura Core SDK
    implementation "ai.nuralogix:anurasdk:${anurasdkVersion}@aar"
    implementation "ai.nuralogix.dfx:dfxsdk:${dfxVersion}"
    implementation "ai.nuralogix.dfx.extras:dfxextras:${dfxExtrasVersion}"
    implementation "ai.nuralogix:mediapipe-facemesh-android:${mediaPipeVersion}"
    implementation "ai.nuralogix:anura-opencv:${opencvVersion}"

    // Anura Core SDK Dependencies
    implementation "org.jetbrains.kotlinx:kotlinx-serialization-json:$kotlinXJsonSerialization"
    implementation "com.google.code.gson:gson:$gsonVersion"
    implementation "org.java-websocket:Java-WebSocket:$websocketVersion"
}

DeepAffex License Key and Study ID

Before your application can connect to DeepAffex Cloud API, you will need a valid DeepAffex license key and Study ID, both of which can be found on the DeepAffex Dashboard.

The included Sample App reads the DeepAffex license key and Study ID from the app/server.properties file, which is included in the application at build time.

Below is the starting template included as app/server.properties.template:

# Caution: Do not use quotation marks when filling the values below.
# Your DeepAffex License Key and Study ID can be found on DeepAffex Dashboard
# https://dashboard.deepaffex.ai
DFX_LICENSE_KEY=
DFX_STUDY_ID=

# These URLs are for the International DeepAffex service.
# If the app is operating in Mainland China, replace "ai" with "cn"
# See more regional clusters: https://docs.deepaffex.ai/guide/cloud/2-1_regions.html
DFX_REST_URL=https://api.deepaffex.ai
DFX_WS_URL=wss://api.deepaffex.ai

After filling in your values, remove the .template suffix from the file name. You should then be able to run the Sample App and take a measurement.
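Because the template warns against quotation marks and leaves the license key and Study ID empty, a quick check of the file can catch the most common setup mistakes before a build. This is a minimal sketch, assuming the file uses the four keys shown in the template; validateServerProperties is a hypothetical helper, not part of the Sample App:

```kotlin
import java.io.StringReader
import java.util.Properties

// Keys taken from app/server.properties.template.
val REQUIRED_SERVER_KEYS = listOf("DFX_LICENSE_KEY", "DFX_STUDY_ID", "DFX_REST_URL", "DFX_WS_URL")

// Hypothetical helper: parses server.properties contents and returns a list of
// problems. An empty list means every required key has an unquoted value.
fun validateServerProperties(text: String): List<String> {
    val props = Properties().apply { load(StringReader(text)) }
    val problems = mutableListOf<String>()
    for (key in REQUIRED_SERVER_KEYS) {
        val value = props.getProperty(key)?.trim()
        when {
            value.isNullOrEmpty() -> problems += "$key is missing or empty"
            // The template explicitly says not to use quotation marks.
            value.startsWith("\"") || value.endsWith("\"") -> problems += "$key must not be quoted"
        }
    }
    return problems
}
```

Run against the unmodified template, this reports that DFX_LICENSE_KEY and DFX_STUDY_ID are still empty.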

Measurement Pipeline

Anura Core SDK for Android provides a MeasurementPipeline interface that connects the various components of the SDK, from fetching camera video frames all the way through rendering the video in the UI. It also provides listener callback methods to observe the measurement state, and to receive and parse results from DeepAffex Cloud.

The included Sample App demonstrates how to set up a MeasurementPipeline and manage its lifecycle. See AnuraExampleMeasurementActivity for a complete example of setting up and using a MeasurementPipeline.
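To illustrate the Source, Pipe and Sink architecture that MeasurementPipeline is built on, here is a conceptual toy model. It is a sketch only: Source, Pipe, Sink and ToyPipeline are hypothetical types written for this illustration and are not the Anura Core SDK's API. In the real SDK, the camera plays the role of the source, processing stages such as the DeepAffex extraction act as pipes, and rendering acts as a sink.

```kotlin
// Conceptual model only: these interfaces are hypothetical and are NOT
// part of Anura Core SDK.
fun interface Source<T> { fun next(): T? }          // produces items; null means "no more"
fun interface Pipe<T> { fun process(item: T): T }   // transforms an item
fun interface Sink<T> { fun consume(item: T) }      // final destination of an item

class ToyPipeline<T>(
    private val source: Source<T>,
    private val pipes: List<Pipe<T>>,
    private val sink: Sink<T>,
) {
    // Pull items from the source until it is exhausted, pass each one through
    // every pipe in order, then hand the result to the sink.
    fun run() {
        while (true) {
            val item = source.next() ?: break
            sink.consume(pipes.fold(item) { acc, pipe -> pipe.process(acc) })
        }
    }
}
```

For example, a source emitting 1, 2, 3 through pipes that multiply by 10 and then add 1 delivers 11, 21, 31 to the sink, in that order.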

Setting up a MeasurementPipeline involves the following components:

Listener

See the Handling Measurement State section below for implementation details.

Core

The Core manages threads and dispatches work to the various components of Anura Core SDK.

core = Core.createAnuraCore(this)

Face Tracker

By default, we use MediaPipeFaceTracker. The example below sets its tracking region to the full display:

val display = resources.displayMetrics
val width = display.widthPixels
val height = display.heightPixels

faceTracker = MediaPipeFaceTracker(core.context)
faceTracker.setTrackingRegion(0, 0, width, height)

Renderer

Setting up the renderer requires two components: the VideoFormat and the Render.

The VideoFormat describes the format of the camera video frames, while the Render is responsible for rendering the camera video frames in the UI.

Anura Core SDK includes a Render class based on OpenGL.

videoFormat = VideoFormat(
    VideoFormat.ColorFormat.RGBA,
    30, // frame rate
    IMAGE_HEIGHT.toInt(),
    IMAGE_WIDTH.toInt()
)

// Use the oval measurement outline if the UI configuration asks for it
val usingOvalUI = measurementUIConfig.measurementOutlineStyle ==
    MeasurementUIConfiguration.MeasurementOutlineStyle.OVAL
render = Render.createGL20Render(videoFormat, usingOvalUI)

Configurations

A configurations map is passed to the pipeline to customize the behaviour of various stages of the MeasurementPipeline.

val configurations = HashMap<String, Configuration<*, *>>()

val cameraConfig = getCameraConfiguration()
val dfxPipeConfig = getDfxPipeConfiguration()
val renderingVideoSinkConfig = getRenderingVideoSinkConfig()

configurations[cameraConfig.id] = cameraConfig
configurations[dfxPipeConfig.id] = dfxPipeConfig
configurations[renderingVideoSinkConfig.id] = renderingVideoSinkConfig

The CameraConfiguration class lets you specify which camera ID to open if the default behaviour is not suitable for your use case. It also lets you change the camera flip setting.

The RenderingVideoSinkConfig controls whether the histograms are updated. If histograms are not turned on, this should be set to false.

val renderingVideoSinkConfig = RenderingVideoSinkConfig(application.applicationContext, null)
renderingVideoSinkConfig.setRuntimeParameter(
    RenderingVideoSinkConfig.RuntimeKey.UPDATE_HISTOGRAM,
    measurementUIConfig.showHistograms.toString()
)

The DfxPipeConfiguration is used to configure DeepAffex Extraction Library and the Constraint System.

By default, we set the following face constraints: presence, position, distance, rotation, and movement.

These default constraints can be toggled using:

val dfxPipeConfiguration = DfxPipeConfiguration(applicationContext, null)
dfxPipeConfiguration.setDefaultConstraints(true)

The behaviour of the constraints can be further customized using the setRuntimeParameter function of the DfxPipeConfiguration.

dfxPipeConfiguration.setRuntimeParameter(
    DfxPipeConfiguration.RuntimeKey.CHECK_FACE_MOVEMENT,
    false
)

Pipeline

We bring all of the components above together to create the pipeline as shown below.

measurementPipeline =
    MeasurementPipeline.createMeasurementPipeline(
        core,
        MEASUREMENT_DURATION.toInt(), // default 30.0
        videoFormat,
        getStudyFileByteData(),       // Study File as a ByteArray
        render,
        getConfigurations(),          // customizations of the pipeline
        faceTracker,
        measurementPipelineListener
    )

UI Components

The MeasurementView displays the various UI elements on top of the camera view (measurement outline, histograms, heart image, etc.). You'll need to set the measurement duration and assign a renderer. See AnuraExampleMeasurementActivity for all of the behaviour handled by the MeasurementView.

measurementView.tracker.setMeasurementDuration(30.0)
measurementView.trackerImageView.setRenderer(render as Renderer)

Starting a Measurement

The MeasurementPipeline interface provides a simple function call to start a measurement. The SDK automatically makes the following DeepAffex Cloud API calls over WebSocket:

- Create Measurement: Creates a measurement on DeepAffex Cloud and generates a Measurement ID.
- Subscribe to Results: Gets results in real time during a measurement, and when the measurement is complete.
- Add Data: Sends facial blood-flow data collected by DeepAffex Extraction Library to DeepAffex Cloud for processing.

Measurement Questionnaire and Partner ID

Your application can include user demographic information and medical history when taking a measurement:

val measurementQuestionnaire = MeasurementQuestionnaire().apply {
    setSexAssignedAtBirth(SexAssignedAtBirth.Female())
    setAge(23)
    setHeightInCm(175)
    setWeightInKg(60)
    setDiabetes(Diabetes.Type2())
    smoking = false
    bloodPressureMedication = true
}

measurementPipeline.startMeasurement(
    measurementQuestionnaire,
    BuildConfig.DFX_STUDY_ID,
    "example-partner-id-1"
)

Partner ID can hold a unique per-user identifier, or any other value that links your application's end users to their measurements taken on DeepAffex Cloud. This is needed because your application's end users are anonymous users on DeepAffex Cloud.
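If you would rather not send your internal user IDs to DeepAffex Cloud as-is, one possible approach is to derive a stable pseudonymous Partner ID from them. This is a sketch under that assumption; partnerIdFor and appSalt are hypothetical names, and salted SHA-256 hashing is our illustrative choice, not an SDK requirement:

```kotlin
import java.security.MessageDigest

// Hypothetical helper, not part of the SDK: derives a stable, pseudonymous
// Partner ID so the raw internal user ID is never sent to DeepAffex Cloud.
// The same (appSalt, internalUserId) pair always yields the same Partner ID.
fun partnerIdFor(internalUserId: String, appSalt: String): String {
    val digest = MessageDigest.getInstance("SHA-256")
        .digest("$appSalt:$internalUserId".toByteArray(Charsets.UTF_8))
    // Hex-encode the 32-byte SHA-256 digest into 64 lowercase characters.
    return digest.joinToString("") { "%02x".format(it) }
}
```

Keep appSalt constant and private to your application: changing it changes every Partner ID, which would break the link between users and their earlier measurements.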

For more information, please refer to:

Handling Measurement State

Your application can observe the MeasurementState of MeasurementPipeline through this MeasurementPipelineListener callback method:

fun onMeasurementStateLiveData(measurementState: LiveData<MeasurementState>?) { ... }

This allows your application to respond to events from MeasurementPipeline and update the UI accordingly. Please refer to AnuraExampleMeasurementActivity for an example of how best to handle MeasurementState changes.

Measurement Results

MeasurementPipelineListener provides two callback methods for getting measurement results during a measurement and after it is complete:

/**
 * Called during a measurement
 */
fun onMeasurementPartialResult(payload: ChunkPayload?, results: MeasurementResults) { ... }

/**
 * Called when a measurement is complete
 */
fun onMeasurementDone(payload: ChunkPayload?, results: MeasurementResults) { ... }

These callback methods pass a MeasurementResults instance that contains the results of the current measurement so far, which can be accessed through two members:

/**
 * Returns all the current results as a map from signal ID to SignalResult
 */
val allResults: Map<String, SignalResult>

/**
 * Get the current result for a [signalID]
 * @return A Double value of the result. If the provided signal ID doesn't have a result,
 * it returns `Double.NaN`
 */
fun result(signalID: String): Double

For a list of all the results available from DeepAffex and their signal IDs, please refer to the DeepAffex Points Reference.
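Since result() returns Double.NaN for a signal ID that has no result yet, UI code typically needs to handle that case rather than display "NaN". A minimal sketch, assuming you pass results::result as the lookup; formatResults is a hypothetical helper and the signal IDs in any call are up to you (take them from the DeepAffex Points Reference):

```kotlin
import java.util.Locale

// Hypothetical helper, not part of the SDK. `result` stands in for
// MeasurementResults.result (for example, pass results::result), which
// returns Double.NaN when a signal ID has no result.
fun formatResults(
    signalIds: List<String>,
    placeholder: String = "--",
    result: (String) -> Double,
): Map<String, String> =
    signalIds.associateWith { id ->
        val value = result(id)
        // Show a placeholder instead of "NaN"; Locale.ROOT keeps "." as the decimal separator.
        if (value.isNaN()) placeholder else "%.1f".format(Locale.ROOT, value)
    }
```

Inside onMeasurementPartialResult, this could be called as formatResults(myIds, result = results::result), where myIds is your own list of signal IDs.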

Measurement Constraints

Anura Core SDK uses the Constraints system of DeepAffex Extraction Library to check the user's face position and movement, and to provide actionable feedback to the user to increase the chances of a successful measurement. MeasurementPipelineListener provides a callback method to check for constraint violations:

fun onMeasurementConstraint(
    isMeasuring: Boolean,
    status: ConstraintResult.ConstraintStatus,
    constraints: MutableMap<String, ConstraintResult.ConstraintStatus>
) {
    ...
}

Please refer to AnuraExampleMeasurementActivity for an example of how best to handle constraint violations and display feedback to the user.
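A common first step when handling the constraints map is to pick out the entries that are not in an acceptable state. The sketch below is generic over the status type because the exact ConstraintResult.ConstraintStatus values should be taken from the SDK; violatedConstraints is a hypothetical helper, and the caller supplies the isGood predicate:

```kotlin
// Hypothetical helper, not part of the SDK: given the `constraints` map passed
// to onMeasurementConstraint, returns the IDs whose status is not acceptable,
// sorted so that feedback is shown to the user in a stable order.
fun <S> violatedConstraints(constraints: Map<String, S>, isGood: (S) -> Boolean): List<String> =
    constraints.filterValues { !isGood(it) }.keys.sorted()
```

In the callback, isGood would compare each status against the SDK's "good" status value; the resulting IDs can then be mapped to your own user-facing messages.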

Signal-to-Noise Ratio and Early Measurement Cancellation

DeepAffex Cloud will occasionally fail to return a measurement result. This usually occurs when the signal-to-noise ratio (SNR) of the extracted blood-flow signal isn't good enough due to insufficient lighting or too much user or camera movement.

In such a scenario, your application might prefer to cancel the measurement early and provide feedback to the user to improve the measurement conditions.

The MeasurementPipeline interface provides a method to check the SNR of the measurement and determine whether the measurement should be cancelled:

fun shouldCancelMeasurement(SNR: Double, chunkOrder: Int): Boolean

The SNR of the measurement so far is available from MeasurementResults. Below is an example of how to cancel a measurement early:

fun onMeasurementPartialResult(payload: ChunkPayload?, results: MeasurementResults) {
    ...
    if (measurementPipeline.shouldCancelMeasurement(results.snr, results.chunkOrder)) {
        stopMeasurement()
        // Show feedback to the user that the measurement was cancelled early
        return
    }
    ...
}
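If further partial results can arrive after stopMeasurement() has been called, the cancellation feedback could be triggered more than once. A minimal sketch of a once-per-measurement guard, assuming you wrap measurementPipeline::shouldCancelMeasurement with it; EarlyCancelGuard is a hypothetical class, not part of the SDK:

```kotlin
import java.util.concurrent.atomic.AtomicBoolean

// Hypothetical helper, not part of the SDK. It wraps a cancellation check such
// as measurementPipeline::shouldCancelMeasurement and reports "cancel" at most
// once per measurement, even if more partial results arrive afterwards.
class EarlyCancelGuard(private val shouldCancel: (snr: Double, chunkOrder: Int) -> Boolean) {
    private val cancelled = AtomicBoolean(false)

    // True only the first time shouldCancel() returns true since the last reset().
    fun shouldCancelNow(snr: Double, chunkOrder: Int): Boolean =
        !cancelled.get() && shouldCancel(snr, chunkOrder) && cancelled.compareAndSet(false, true)

    // Call before starting a new measurement.
    fun reset() = cancelled.set(false)
}
```

The AtomicBoolean makes the "first caller wins" decision safe even if callbacks are delivered on different threads.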

Measurement UI Customization

The measurement UI can be customized through MeasurementUIConfiguration. By default, MeasurementUIConfiguration provides a measurement UI similar to the one in Anura Lite by NuraLogix.

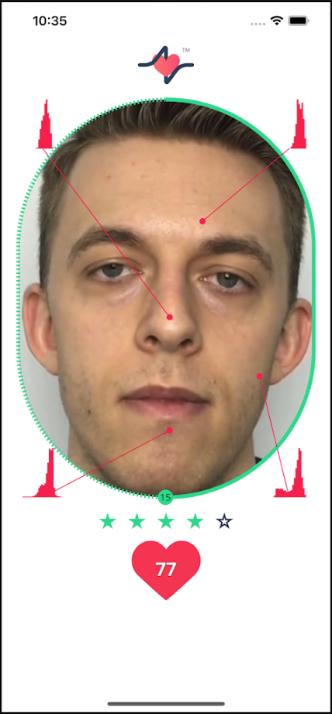

Below is an example of the parameters that can be adjusted through MeasurementUIConfiguration:

val customMeasurementUIConfig = MeasurementUIConfiguration().apply {
    measurementOutlineStyle = MeasurementUIConfiguration.MeasurementOutlineStyle.OVAL
    showHistograms = true
    overlayBackgroundColor = Color.WHITE
    measurementOutlineActiveColor = Color.BLACK
    measurementOutlineInactiveColor = Color.WHITE
    histogramActiveColor = Color.YELLOW
    histogramInactiveColor = Color.GREEN
    statusMessagesTextColor = Color.CYAN
    timerTextColor = Color.BLUE
}

measurementView.setMeasurementUIConfiguration(customMeasurementUIConfig)

The MeasurementView class also provides helper methods to modify the icon at the top of the view:

with(measurementView) {
    showAnuraIcon(true)                      // show (true) or hide (false) the icon
    setIconImageResource(R.drawable.my_icon) // drawable resource ID; my_icon is a placeholder
    setIconHeightFromTop(48)                 // top margin of the icon; 48 is an example value
}

A screenshot is shown below: