Anura Core SDK Developer Guide

This developer guide will help you effectively use the NuraLogix Anura Core SDK to build your own mobile applications for Affective AI. Here you will find step-by-step instructions to get you started and also more detailed references as you delve deeper.

The Introduction provides an architectural overview of the Core SDK.

Chapter 2 provides details about the contents of the Android and iOS core packages. Chapters 3 and 4 walk you through setup, configuration, and basic usage of Anura Core SDK for Android and iOS respectively. Chapter 5 talks about the sample applications that ship with the SDK.

Chapter 6 explains the details of adapting third-party face trackers and alternate camera modules.

Chapter 7 provides some details about the user profile questionnaire.

Chapter 8 discusses how to interpret the results returned by the DeepAffex Cloud.

Chapter 9 highlights some best practices.

Chapter 10 provides pointers to cross-platform app development.

Chapter 11 provides recommendations about the platform requirements.

Chapter 12 provides a troubleshooting guide.

Chapter 13 provides a migration guide for Anura Core SDK for Android 2.4.0.

Chapter 14 provides a migration guide for Anura Core SDK for iOS 1.9.0.

Finally, there is an FAQ section containing answers to some of the questions that you may have and a chapter on Release Notes.

Last updated on 2025-10-29 by Vineet Puri (v1.23.0)

Disclaimer

DeepAffex is not a medical device and should not be used as a substitute for clinical judgment by a health care professional. DeepAffex is intended to improve your awareness of general wellness. DeepAffex is not intended to diagnose, treat, mitigate or prevent any disease, symptom, disorder or abnormal physical condition. Consult with a health care professional or emergency services if you believe you may have a medical issue.

Trademarks

NuraLogix, Anura, DeepAffex and other trademarks are trademarks of NuraLogix Corporation or its subsidiaries.

The following is a non-exhaustive and illustrative list of trademarks owned by NuraLogix Corporation and its subsidiaries.

- Affective Ai™

- nuralogix®

- Anura®

- DeepAffex®

- TOI®

- Anura® - Telehealth

- Anura® - MagicMirror

Introduction

Before you begin, we recommend perusing the DeepAffex Developer Guide so that you have a basic understanding of NuraLogix's DeepAffex technologies and terminologies. Please be sure to read the first two chapters - Introduction and Getting Started.

Anura Core SDK

NuraLogix provides the DeepAffex Cloud for Affective AI. It is used to analyze facial blood-flow information that is extracted from image streams using the DeepAffex Extraction Library.

Anura Core SDK is a mobile software development kit designed to help developers quickly add NuraLogix Affective AI to in-house or commercial mobile applications. It contains the DeepAffex Extraction Library and also provides the additional features required for doing DeepAffex analysis on mobile platforms, including camera capture and preview, face tracker integration, and a cloud module for interacting with the DeepAffex Cloud.

Anura Core SDK is available on both Android and iOS and is distributed privately via secure Git repositories to companies in partnership with NuraLogix.

It is canonically documented inside the Android and iOS Git repositories respectively.

Architecture

The architecture of a typical Anura Core SDK-based application is shown below:

Localization

For demonstration purposes, the Anura Core SDK Sample App includes a subset of the UI prompts used by NuraLogix Anura. You can change these prompts and add more according to the needs of your app and its users.

Anura Core SDK package manifest

Package contents of Anura Core SDK for Android

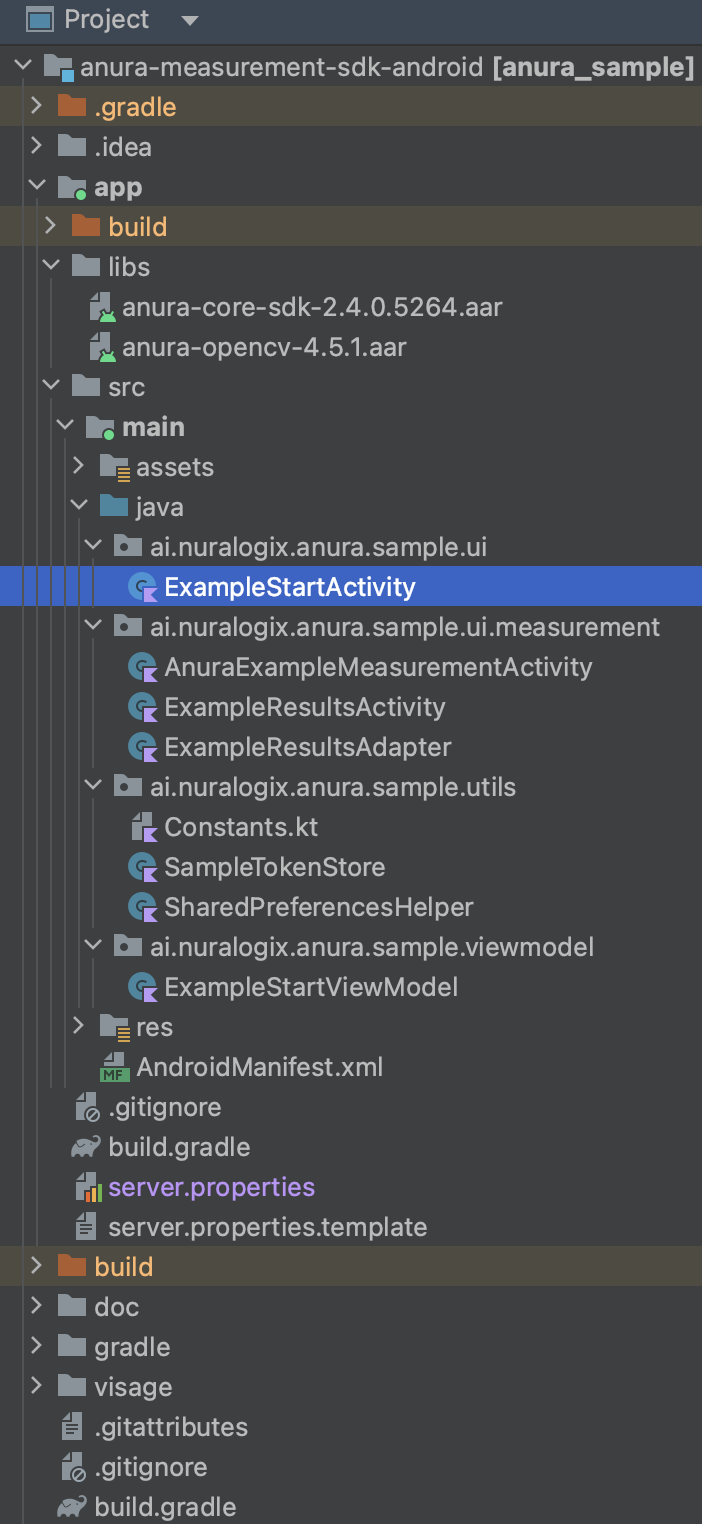

The Anura Core SDK for Android sample project looks like:

Binaries included

- Anura Core SDK (~26.9 MB): includes arm64-v8a DFX SDK native libraries
- OpenCV (~6 MB): a streamlined OpenCV package

The sizes listed above refer to uncompressed binary files; the actual increase in your application's size won't match these figures exactly. For instance, compiling the Sample App with all the dependencies for Android yields a .apk file of approximately 44 MB.

Source included

-

ExampleStartActivity: An Activity for entering user demographics information and Partner ID. It also demonstrates communicating with DeepAffex Cloud API to register the license and validate the device token -

AnuraExampleMeasurementActivity: The main Activity with the measurement UI that demonstrates how to use Anura Core APIs, and it is similar to the NuraLogix Anura app -

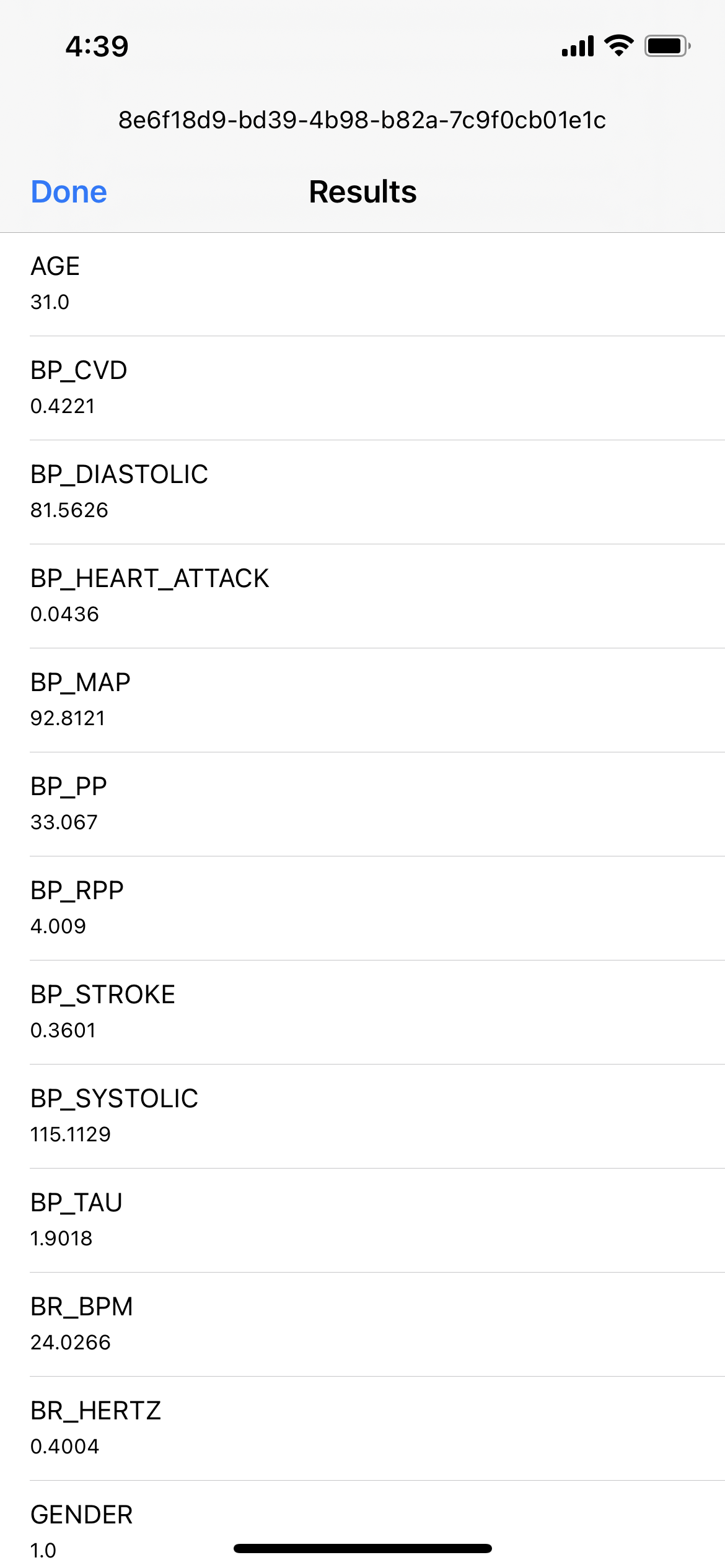

ExampleResultsActivity: An example activity that displays all of the results received from DeepAffex Cloud in a list -

SampleTokenStore: A sample implementation of theTokenStorerequired for Anura Core to handle expired/invalid tokens and to renew them automatically

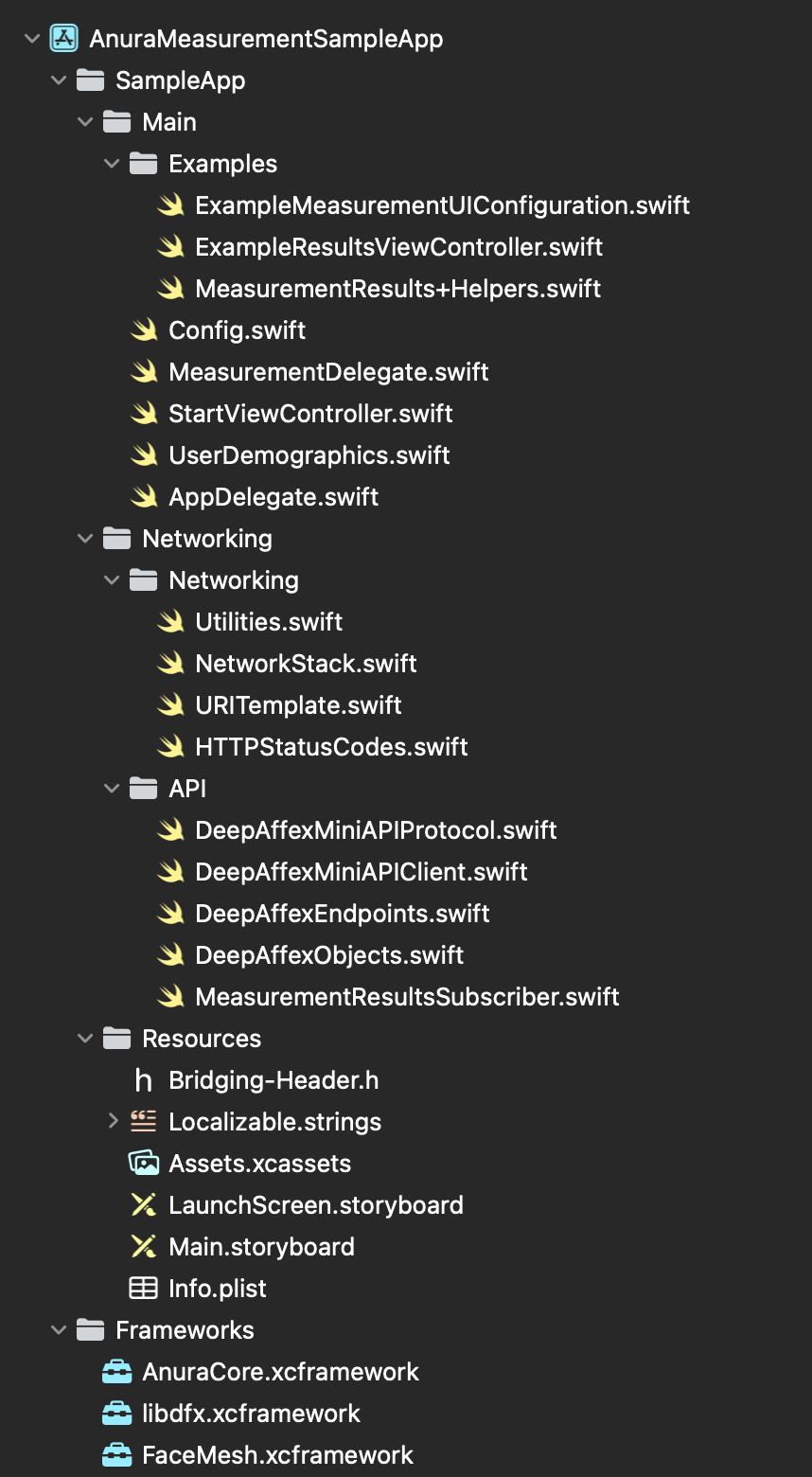

Package contents of Anura Core SDK for iOS

The Anura Core SDK for iOS sample project looks like:

Binaries included

- AnuraCore.xcframework (~4.6 MB): Anura Core dynamic framework
- libdfx.xcframework (~10.5 MB): DeepAffex Extraction Library dynamic framework
- FaceMesh.xcframework (~26.6 MB): MediaPipe FaceMesh dynamic framework

The sizes listed above refer to uncompressed binary files; the actual increase in your application's size won't match these figures exactly. For instance, compiling the Sample App with all the dependencies for iOS yields a .ipa file of approximately 17 MB.

Sources included

- Config: A configuration file used by the Sample App to set the application's DeepAffex configuration: license key, study ID, and API hostname
- StartViewController: A UIViewController for entering user demographic information and Partner ID. It also demonstrates how to set up AnuraMeasurementViewController and DeepAffexMiniAPIClient to begin taking measurements using DeepAffex Cloud.
- UserDemographics: A struct that validates user demographic information
- MeasurementUIConfiguration+CustomTheme: An example theme to show how to customize the measurement UI
- MeasurementDelegate: An example class to respond to various events from DeepAffex Cloud and AnuraCore to customize the app behaviour
- MeasurementResultsSubscriber: Creates and manages a WebSocket connection to DeepAffex Cloud API to subscribe to real-time results
- ExampleResultsViewController: An example UIViewController that displays all of the results received from DeepAffex Cloud in a list

Anura Core SDK for Android

Anura Core SDK for Android provides all the essential components for integrating NuraLogix Affective AI and DeepAffex into your Android application. It includes DeepAffex Extraction Library, a DeepAffex Cloud API client, a camera control module, a face tracker, and other components to allow your application to take measurements using DeepAffex. This is the same SDK used to build Anura Lite by NuraLogix.

Anura Core SDK for Android has a pipeline architecture (Source, Pipe and Sink modules). A typical measurement pipeline is shown below:

Requirements

The latest version of the Anura Core SDK for Android is 2.4. It requires Android 7.1 (API Level 25) or higher. For a detailed list of requirements, please refer to the platform requirements chapter.

Getting Started

Accessing Anura Core SDK and Sample App

Please contact NuraLogix to get access to the private Git repository.

Setting up Maven access

Starting with Anura Core SDK 2.4.10, SDK dependencies are no longer bundled as .aar files in the libs folder. Instead, dependencies are now fetched from Maven.

To download dependencies from Maven, you must authenticate with an access token:

- Generate an Access Token:
  - Open your account menu (top-right) → Settings.
  - In the left menu, select Applications.
  - Click Select Permissions, then enable:
    - Repository → Read
    - Package → Read
  - Click Generate Token and save the token provided.
- Get Your Username:
  - Your Git username is listed under Settings → Profile in your git.deepaffex.ai Git account.
- Configure Environment Variables:
  - Set the following environment variables for authentication:

        export DFX_GIT_USERNAME="your-username"
        export DFX_GIT_TOKEN="your-access-token"

  - Refer to settings.gradle for details on how to set up Maven access and dependency resolution.
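Before running a Gradle sync, it can help to confirm that both variables are actually visible to the process. The following is a minimal sketch in plain Java so it can be run without the SDK. The class and method names (`MavenCredentialCheck`, `missingVariables`) are hypothetical and not part of Anura Core SDK; only the two variable names come from the steps above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical helper, not part of Anura Core SDK. */
final class MavenCredentialCheck {
    // Variable names taken from the setup steps above.
    static final List<String> REQUIRED = List.of("DFX_GIT_USERNAME", "DFX_GIT_TOKEN");

    /** Returns the required variables that are unset or blank in the given environment. */
    static List<String> missingVariables(Map<String, String> env) {
        List<String> missing = new ArrayList<>();
        for (String name : REQUIRED) {
            String value = env.get(name);
            if (value == null || value.trim().isEmpty()) {
                missing.add(name);
            }
        }
        return missing;
    }
}
```

Calling `MavenCredentialCheck.missingVariables(System.getenv())` returns an empty list when both variables are set in the current environment.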


Dependencies

Anura Core SDK for Android

You can set up project dependencies in your build.gradle as shown below:

// Anura Core SDK

implementation "ai.nuralogix:anurasdk:${anurasdkVersion}@aar"

implementation "ai.nuralogix.dfx:dfxsdk:${dfxVersion}"

implementation "ai.nuralogix.dfx.extras:dfxextras:${dfxExtrasVersion}"

implementation "ai.nuralogix:mediapipe-facemesh-android:${mediaPipeVersion}"

implementation "ai.nuralogix:anura-opencv:${opencvVersion}"

// Anura Core SDK Dependencies

implementation "org.jetbrains.kotlinx:kotlinx-serialization-json:$kotlinXJsonSerialization"

implementation "com.google.code.gson:gson:$gsonVersion"

implementation "org.java-websocket:Java-WebSocket:$websocketVersion"

DeepAffex License Key and Study ID

Before your application can connect to DeepAffex Cloud API, you will need a valid DeepAffex license key and Study ID, which can be found on DeepAffex Dashboard.

The included Sample App sets the DeepAffex license key and Study ID in the app/server.properties file, which is included in the application at build time.

Below is the included starting template app/server.properties.template:

# Caution: Do not use quotation marks when filling the values below.

# Your DeepAffex License Key and Study ID can be found on DeepAffex Dashboard

# https://dashboard.deepaffex.ai

DFX_LICENSE_KEY=

DFX_STUDY_ID=

# These URLs are for the International DeepAffex service.

# If the app is operating in Mainland China, replace "ai" with "cn"

# See more regional clusters: https://docs.deepaffex.ai/guide/cloud/2-1_regions.html

DFX_REST_URL=https://api.deepaffex.ai

DFX_WS_URL=wss://api.deepaffex.ai

After filling in the values, remove the .template suffix from the file name. You should then be able to run the Sample App and take a measurement.
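A common mistake with this file is leaving a value empty or wrapping it in quotation marks. The sketch below, in plain Java, shows one way to catch both before building. It is an illustration under stated assumptions: `ServerPropertiesCheck` and `problems` are hypothetical names, not part of the SDK, and the key list is copied from the template above.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

/** Hypothetical pre-build check, not part of Anura Core SDK. */
final class ServerPropertiesCheck {
    // Keys taken from app/server.properties.template.
    static final List<String> KEYS =
            List.of("DFX_LICENSE_KEY", "DFX_STUDY_ID", "DFX_REST_URL", "DFX_WS_URL");

    /** Returns one human-readable problem per empty or quoted value. */
    static List<String> problems(String fileContents) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(fileContents));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> problems = new ArrayList<>();
        for (String key : KEYS) {
            String value = props.getProperty(key, "").trim();
            if (value.isEmpty()) {
                problems.add(key + " is empty");
            } else if (value.startsWith("\"") || value.startsWith("'")) {
                problems.add(key + " must not be wrapped in quotation marks");
            }
        }
        return problems;
    }
}
```

Pass the file contents as a string (for example, read with `java.nio.file.Files.readString`); an empty list means the four keys are present and unquoted.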

Measurement Pipeline

Anura Core SDK for Android provides a MeasurementPipeline interface that connects the various components of the SDK, from fetching camera video frames all the way through rendering the video in the UI. It also provides listener callback methods to observe the measurement state, and to receive and parse results from DeepAffex Cloud.

The included Sample App demonstrates how to set up a MeasurementPipeline and manage its lifecycle. See AnuraExampleMeasurementActivity for the complete example.

The setup of the MeasurementPipeline has a few components:

Listener

See the Handling Measurement State section below for implementation details.

Core

The Core manages the threads and dispatches work to the various components of Anura Core SDK.

core = Core.createAnuraCore(this)

Face Tracker

By default we use the MediaPipeFaceTracker.

val display = resources.displayMetrics

val width = display.widthPixels

val height = display.heightPixels

faceTracker = MediaPipeFaceTracker(core.context)

faceTracker.setTrackingRegion(0, 0, width, height)

Renderer

Setting up the renderer requires two components: the VideoFormat and the Render.

The VideoFormat details the format of the camera video frames, while the Render is responsible for rendering the camera video frames in the UI.

Anura Core SDK includes a Render class based on OpenGL.

videoFormat = VideoFormat(

VideoFormat.ColorFormat.RGBA,

30,

IMAGE_HEIGHT.toInt(),

IMAGE_WIDTH.toInt()

)

val usingOvalUI = measurementUIConfig.measurementOutlineStyle ==

MeasurementUIConfiguration.MeasurementOutlineStyle.OVAL

render = Render.createGL20Render(videoFormat, usingOvalUI)

Configurations

A configurations map is passed to the pipeline to customize the behaviour of various stages of the MeasurementPipeline.

val configurations = HashMap<String, Configuration<*, *>>()

val cameraConfig = getCameraConfiguration()

val dfxPipeConfig = getDfxPipeConfiguration()

val renderingVideoSinkConfig = getRenderingVideoSinkConfig()

configurations[cameraConfig.id] = cameraConfig

configurations[dfxPipeConfig.id] = dfxPipeConfig

configurations[renderingVideoSinkConfig.id] = renderingVideoSinkConfig

The CameraConfiguration class will allow you to set a specific Camera ID to use to open the camera if the default behaviour is not suitable for your use case, along with changing the Camera Flip setting.

The RenderingVideoSinkConfig controls whether the histograms are updated. If histograms are not shown, set this to false.

val renderingVideoSinkConfig = RenderingVideoSinkConfig(application.applicationContext, null)

renderingVideoSinkConfig.setRuntimeParameter(

RenderingVideoSinkConfig.RuntimeKey.UPDATE_HISTOGRAM,

measurementUIConfig.showHistograms.toString()

)

The DfxPipeConfiguration is used to configure DeepAffex Extraction Library and the Constraint System.

By default, we set the following face constraints: presence, position, distance, rotation, and movement.

These defaults can be toggled as follows:

val dfxPipeConfiguration = DfxPipeConfiguration(applicationContext, null)

dfxPipeConfiguration.setDefaultConstraints(true)

The behaviour of the constraints can be further customized using the setRuntimeParameter function of the DfxPipeConfiguration.

dfxPipeConfiguration.setRuntimeParameter(

DfxPipeConfiguration.RuntimeKey.CHECK_FACE_MOVEMENT,

false

)

Pipeline

We bring all of the components above together to create the pipeline as shown below.

measurementPipeline =

MeasurementPipeline.createMeasurementPipeline(

core,

MEASUREMENT_DURATION.toInt(), // default 30.0

videoFormat,

getStudyFileByteData(), // Study File as a ByteArray

render,

getConfigurations(), // customizations of the pipeline

faceTracker,

measurementPipelineListener

)

UI Components

The MeasurementView displays the various UI elements on top of the camera view (measurement outline, histograms, heart image, etc). You'll need to set the measurement duration and assign a renderer. See AnuraExampleMeasurementActivity for all of the behaviour handled by the MeasurementView.

measurementView.tracker.setMeasurementDuration(30.0)

measurementView.trackerImageView.setRenderer(render as Renderer)

Starting a Measurement

The MeasurementPipeline interface provides a simple function call to start a measurement. The SDK will automatically handle making the API calls to DeepAffex Cloud API over WebSocket:

- Create Measurement: Creates a measurement on DeepAffex Cloud and generates a Measurement ID.

- Subscribe to Results: Get results in real-time during a measurement, and when a measurement is complete.

- Add Data: Send facial blood-flow data collected by DeepAffex Extraction Library to DeepAffex Cloud for processing

Measurement Questionnaire and Partner ID

Your application can include user demographic information and medical history when taking a measurement:

val measurementQuestionnaire = MeasurementQuestionnaire().apply {

setSexAssignedAtBirth(SexAssignedAtBirth.Female())

setAge(23)

setHeightInCm(175)

setWeightInKg(60)

setDiabetes(Diabetes.Type2())

smoking = false

bloodPressureMedication = true

}

measurementPipeline.startMeasurement(

measurementQuestionnaire,

BuildConfig.DFX_STUDY_ID,

"example-partner-id-1"

)

Your application's end users are considered anonymous users on DeepAffex Cloud. Partner ID can therefore hold a unique-per-user identifier, or any other value that links your application's end users with their measurements taken on DeepAffex Cloud.
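If you prefer not to send an internal user ID as-is, one option is to derive a stable, opaque value from it. The sketch below is one possible approach in plain Java, not a NuraLogix recommendation: `PartnerIds`, `fromUserId`, and the salt parameter are hypothetical, and you should check any length or character limits that apply to Partner ID before adopting it.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical helper, not part of Anura Core SDK. */
final class PartnerIds {
    /** Derives a stable 64-character hex identifier from an app-side user ID using SHA-256. */
    static String fromUserId(String appSalt, String userId) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            byte[] hash = digest.digest((appSalt + ":" + userId).getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(hash.length * 2);
            for (byte b : hash) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // SHA-256 is a required algorithm on the Java platform, so this is not expected.
            throw new IllegalStateException(e);
        }
    }
}
```

The same user ID and salt always produce the same value, so your backend can recompute it to match measurements to users without storing the mapping on DeepAffex Cloud.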

For more information, please refer to:

Handling Measurement State

Your application can observe MeasurementState of MeasurementPipeline through this MeasurementPipelineListener callback method:

fun onMeasurementStateLiveData(measurementState: LiveData<MeasurementState>?) { ... }

This allows your application to respond to events from MeasurementPipeline and update the UI accordingly. Please refer to AnuraExampleMeasurementActivity for an example of how to handle MeasurementState changes.

Measurement Results

MeasurementPipelineListener provides two callback methods for receiving measurement results, one during a measurement and one after it is complete:

/**

* Called during a measurement

*/

fun onMeasurementPartialResult(payload: ChunkPayload?, results: MeasurementResults) { ... }

/**

* Called when a measurement is complete

*/

fun onMeasurementDone(payload: ChunkPayload?, results: MeasurementResults) { ... }

These callback methods pass a MeasurementResults instance that contains the results of the current measurement so far, which can be accessed through two methods:

/**

* Returns all the current results for each signal ID in a `[String]: [SignalResult]` hash map

*/

val allResults : Map<String, SignalResult>

/**

* Get the current result for a [signalID]

* @return A Double value of the result. If the provided signal ID doesn't have a result,

* it returns `Double.NaN`

*/

fun result(signalID: String): Double

For a list of all the available results from DeepAffex and signal IDs, please refer to DeepAffex Points Reference.
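Because `result(signalID)` returns `Double.NaN` when no result is available, display code needs an explicit NaN check; comparing with `== Double.NaN` is always false in Java and Kotlin. The following plain-Java sketch shows the idea. `ResultFormatter` is a hypothetical helper, not part of the SDK, and the signal IDs in the usage note are placeholders.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

/** Hypothetical display helper, not part of Anura Core SDK. */
final class ResultFormatter {
    /** Formats a value as returned by result(signalID); NaN is shown as "--". */
    static String format(double value) {
        return Double.isNaN(value) ? "--" : String.format(Locale.ROOT, "%.1f", value);
    }

    /** Formats each requested signal ID in order, treating absent IDs as NaN. */
    static Map<String, String> formatAll(Map<String, Double> values, Iterable<String> signalIds) {
        Map<String, String> out = new LinkedHashMap<>();
        for (String id : signalIds) {
            out.put(id, format(values.getOrDefault(id, Double.NaN)));
        }
        return out;
    }
}
```

For example, formatting the placeholder IDs `SIGNAL_A` and `SIGNAL_B` when only `SIGNAL_A` has a value of 72.5 yields "72.5" for the first and "--" for the second.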

Measurement Constraints

Anura Core SDK utilizes the Constraints system of DeepAffex Extraction Library to check the user's face position and movement, and provide actionable feedback to the user to increase the chances of a successful measurement. MeasurementPipelineListener provides a callback method to check for constraint violations:

fun onMeasurementConstraint(

isMeasuring: Boolean,

status: ConstraintResult.ConstraintStatus,

constraints: MutableMap<String, ConstraintResult.ConstraintStatus>

) {

...

}

Please refer to AnuraExampleMeasurementActivity for an example of how to handle constraint violations and display feedback to the user.

Signal-to-Noise Ratio and Early Measurement Cancellation

DeepAffex Cloud will occasionally fail to return a measurement result. This usually occurs when the signal-to-noise ratio (SNR) of the extracted blood-flow signal isn't good enough due to insufficient lighting or too much user or camera movement.

In such a scenario, your application might prefer to cancel the measurement early and provide feedback to the user to improve the measurement conditions.

The MeasurementPipeline interface provides a method to check the SNR of the measurement and determine if the measurement should be cancelled:

fun shouldCancelMeasurement(SNR: Double, chunkOrder: Int): Boolean

The SNR of the measurement so far is available from MeasurementResults. Below is an example of how to cancel a measurement early:

fun onMeasurementPartialResult(payload: ChunkPayload?, results: MeasurementResults) {

...

if (measurementPipeline.shouldCancelMeasurement(results.snr, results.chunkOrder)) {

stopMeasurement()

// Show feedback to the user that the measurement was cancelled early

return

}

...

}
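If your application needs its own rule in addition to the SDK's check, a decision based on the same two inputs (SNR and chunk order) can be sketched as follows. This plain-Java example is purely illustrative: it does not reproduce the criteria used by `shouldCancelMeasurement`, which are not described in this guide, and the class name and both thresholds are placeholders you would need to choose yourself.

```java
/** Hypothetical app-side policy; it does not mirror the SDK's internal criteria. */
final class SnrCancelPolicy {
    private final double minSnr;          // placeholder threshold
    private final int firstCheckedChunk;  // placeholder: earliest chunk at which the rule applies

    SnrCancelPolicy(double minSnr, int firstCheckedChunk) {
        this.minSnr = minSnr;
        this.firstCheckedChunk = firstCheckedChunk;
    }

    /** True only when enough chunks have arrived and the SNR is a real number below the threshold. */
    boolean shouldCancel(double snr, int chunkOrder) {
        if (Double.isNaN(snr) || chunkOrder < firstCheckedChunk) {
            return false; // not enough information yet
        }
        return snr < minSnr;
    }
}
```

Waiting for a minimum chunk order avoids cancelling on the first, typically noisiest, part of a measurement; treating NaN as "no decision" keeps a missing SNR value from triggering a cancellation.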

Measurement UI Customization

The measurement UI can be customized through MeasurementUIConfiguration. By default, MeasurementUIConfiguration will provide a measurement UI similar to the one in Anura Lite by NuraLogix.

Below is an example of the parameters that can be adjusted by MeasurementUIConfiguration:

val customMeasurementUIConfig = MeasurementUIConfiguration().apply {

measurementOutlineStyle = MeasurementUIConfiguration.MeasurementOutlineStyle.OVAL

showHistograms = true

overlayBackgroundColor = Color.WHITE

measurementOutlineActiveColor = Color.BLACK

measurementOutlineInactiveColor = Color.WHITE

histogramActiveColor = Color.YELLOW

histogramInactiveColor = Color.GREEN

statusMessagesTextColor = Color.CYAN

timerTextColor = Color.BLUE

}

measurementView.setMeasurementUIConfiguration(customMeasurementUIConfig)

The MeasurementView class also provides helper methods to modify the icon at the top:

with(measurementView) {
    showAnuraIcon(true)                        // show: Boolean
    setIconImageResource(R.drawable.app_icon)  // resourceId: Int; R.drawable.app_icon is a placeholder
    setIconHeightFromTop(24)                   // marginTop: Int; 24 is an example value
}

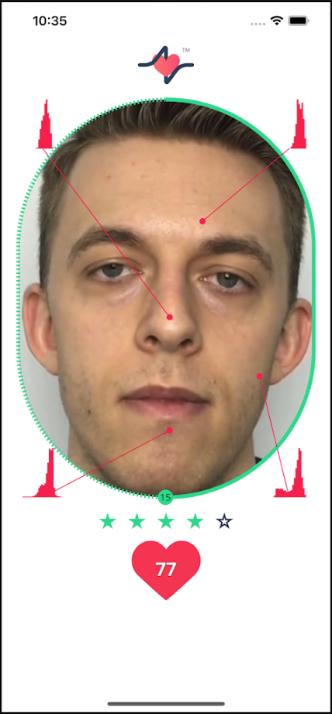

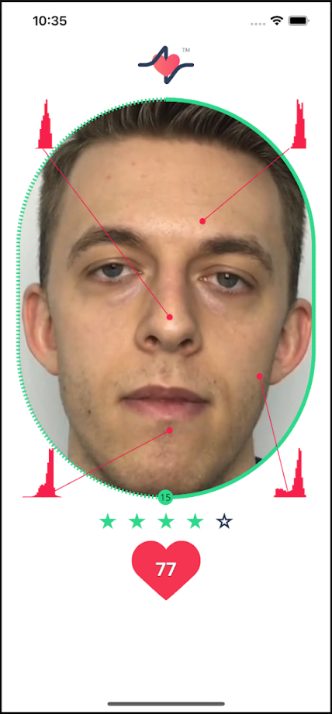

A screenshot is shown below:

Anura Core SDK for iOS

Anura Core SDK for iOS provides all the essential components for integrating NuraLogix Affective AI and DeepAffex into your iOS application. It includes DeepAffex Extraction Library, a DeepAffex Cloud API client, a camera control module, a face tracker, and other components to allow your application to take measurements using DeepAffex. This is the same SDK used to build Anura Lite by NuraLogix.

Requirements

The latest version of the Anura Core SDK for iOS is 1.9.2. It requires Apple iOS 13.0 or higher. For a detailed list of requirements, please refer to the platform requirements chapter.

Setup

Accessing Anura Core SDK and Sample App

Please contact NuraLogix to get access to the private Git repository.

Dependencies

- Run pod install under the root folder of the sample project. If you start your own project, please configure your Podfile accordingly.
- Anura Core SDK for iOS frameworks are included in ./Frameworks. They are already configured in the sample project's target Build Settings. If you start your own project, please configure the frameworks in your project's target Build Settings.

Using the Core SDK

DeepAffex License Key and Study ID

Before your application can connect to DeepAffex Cloud API, you will need a valid DeepAffex license key and Study ID, which can be found on DeepAffex Dashboard.

The included Sample App sets the DeepAffex license key and Study ID in the Config.swift file, which is included in the application at build time.

AnuraMeasurementViewController

Anura Core SDK for iOS provides an AnuraMeasurementViewController class that integrates the various components of the SDK, from fetching camera video frames all the way through rendering the video in the UI. It also provides delegate methods to observe the measurement state and lifecycle.

Measurement Constraints

Anura Core SDK utilizes the Constraints system of DeepAffex Extraction Library to check the user's face position and movement, and provide actionable feedback to the user to increase the chances of a successful measurement.

You can use the MeasurementConfiguration object when instantiating an AnuraMeasurementViewController to configure the default set of constraints. The flags and their explanations are given below:

/// A Boolean value that determines whether the default face position and movement

/// constraints before the measurement starts are enabled — Default is `true`

public var defaultConstraintsEnabled: Bool

/// A Boolean value that determines whether the default face position and movement

/// constraints during the measurement are enabled — Default is `false`

public var defaultConstraintsDuringMeasurementEnabled: Bool

/// A Boolean value that determines whether the lighting quality constraint is

/// enabled — Default is `false`

public var lightingQualityConstraintEnabled: Bool

If the default configuration is not sufficient, you can manually configure the constraints by calling the following methods on an AnuraMeasurementViewController:

/// Enable a DeepAffex Extraction Library constraint

/// - Parameter key: The constraint key

public func enableConstraint(_ key: String)

/// Disable a DeepAffex Extraction Library constraint

/// - Parameter key: The constraint key

public func disableConstraint(_ key: String)

/// Sets the value of a DeepAffex Extraction Library constraint

/// - Parameter key: The constraint key

/// - Parameter value: The value of the constraint

public func setConstraint(key: String, value: String)

In the iOS Sample App, you can also refer to MeasurementDelegate.swift to see how to configure constraints.

func anuraMeasurementControllerDidLoad(_ controller: AnuraMeasurementViewController) {

print("***** anuraMeasurementControllerDidLoad")

// Create a MeasurementResultsSubscriber object to receive live results during a measurement

self.measurementResultsSubscriber = MeasurementResultsSubscriber(token: api.token)

self.measurementResultsSubscriber.delegate = self

// Store weak reference to AnuraMeasurementViewController to use it to decode measurement results

self.measurementController = controller

// This is where constraints can be configured

// Example:

//

// controller.enableConstraint("checkBackLight")

// controller.setConstraint(key: "maxMovement_mm", value: "6")

}

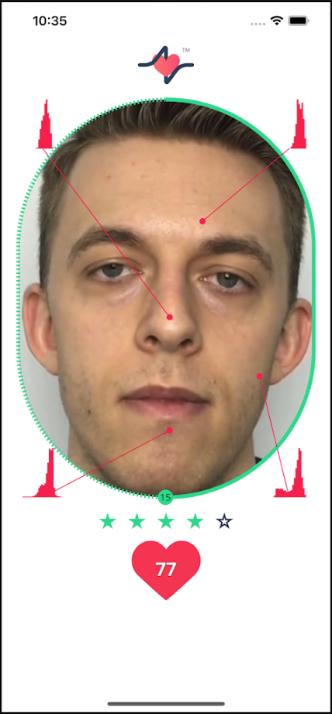

Measurement UI Customization

The exact measurement UI from the Anura App is available in AnuraMeasurementViewController (including stars, beating heart, etc.). You can programmatically show or hide any element on the screen through MeasurementUIConfiguration. If you want to create your own UI, you can use MeasurementUIConfiguration to hide all the default UI elements, then implement your own UI elements and place them on top of the camera view.

@objc @objcMembers public class MeasurementUIConfiguration : NSObject {

......

@objc public var showHeartRateDuringMeasurement: Bool

@objc public var showHistograms: Bool

@objc public var showStatusMessages: Bool

@objc public var showMeasurementStartedMessage: Bool

@objc public var showCountdown: Bool

@objc public var showOverlay: Bool

@objc public var showMeasurementOutline: Bool

@objc public var showLightingQualityStars: Bool

@objc public var showFacePolygons: Bool

......

}

You can also customize the font and color of some elements according to your needs:

static var exampleCustomTheme : MeasurementUIConfiguration {

......

// Customize Fonts

uiConfig.statusMessagesFont = UIFont(name: "AmericanTypewriter", size: 25)!

uiConfig.countdownFont = UIFont(name: "AmericanTypewriter", size: 80)!

uiConfig.heartRateFont = UIFont(name: "AmericanTypewriter-Bold", size: 30)!

uiConfig.timerFont = UIFont(name: "AmericanTypewriter-Bold", size: 10)!

// Customize Colors

let greenColor = UIColor(red: 0, green: 0.77, blue: 0.42, alpha: 1.0)

let darkMagentaColor = UIColor(red: 0.77, green: 0, blue: 0.35, alpha: 1.0)

uiConfig.overlayBackgroundColor = UIColor(red: 0.2, green: 0, blue: 0.09, alpha: 0.75)

uiConfig.measurementOutlineInactiveColor = .darkGray

uiConfig.measurementOutlineActiveColor = darkMagentaColor

uiConfig.heartRateShapeColor = greenColor

uiConfig.lightingQualityStarsActiveColor = darkMagentaColor

uiConfig.lightingQualityStarsInactiveColor = .darkGray

uiConfig.statusMessagesTextColor = .white

uiConfig.statusMessagesTextShadowColor = .black

uiConfig.timerTextColor = .white

uiConfig.heartRateTextColor = .white

uiConfig.heartRateTextShadowColor = .black

uiConfig.histogramActiveColor = greenColor

uiConfig.histogramInactiveColor = darkMagentaColor

......

}

Refer to the MeasurementUIConfiguration+CustomTheme file for a complete example.

A screenshot of the default measurement UI is shown below:

Sample Applications

Anura Core SDK for Android and Anura Core SDK for iOS each include a basic Sample App. These apps demonstrate how to use the SDK and can be used as a reference when building your own apps.

The Sample App is provided as part of the delivered product package. Use of the Sample App requires a valid DeepAffex® license. For licensing inquiries or to obtain access, please contact our Sales team.

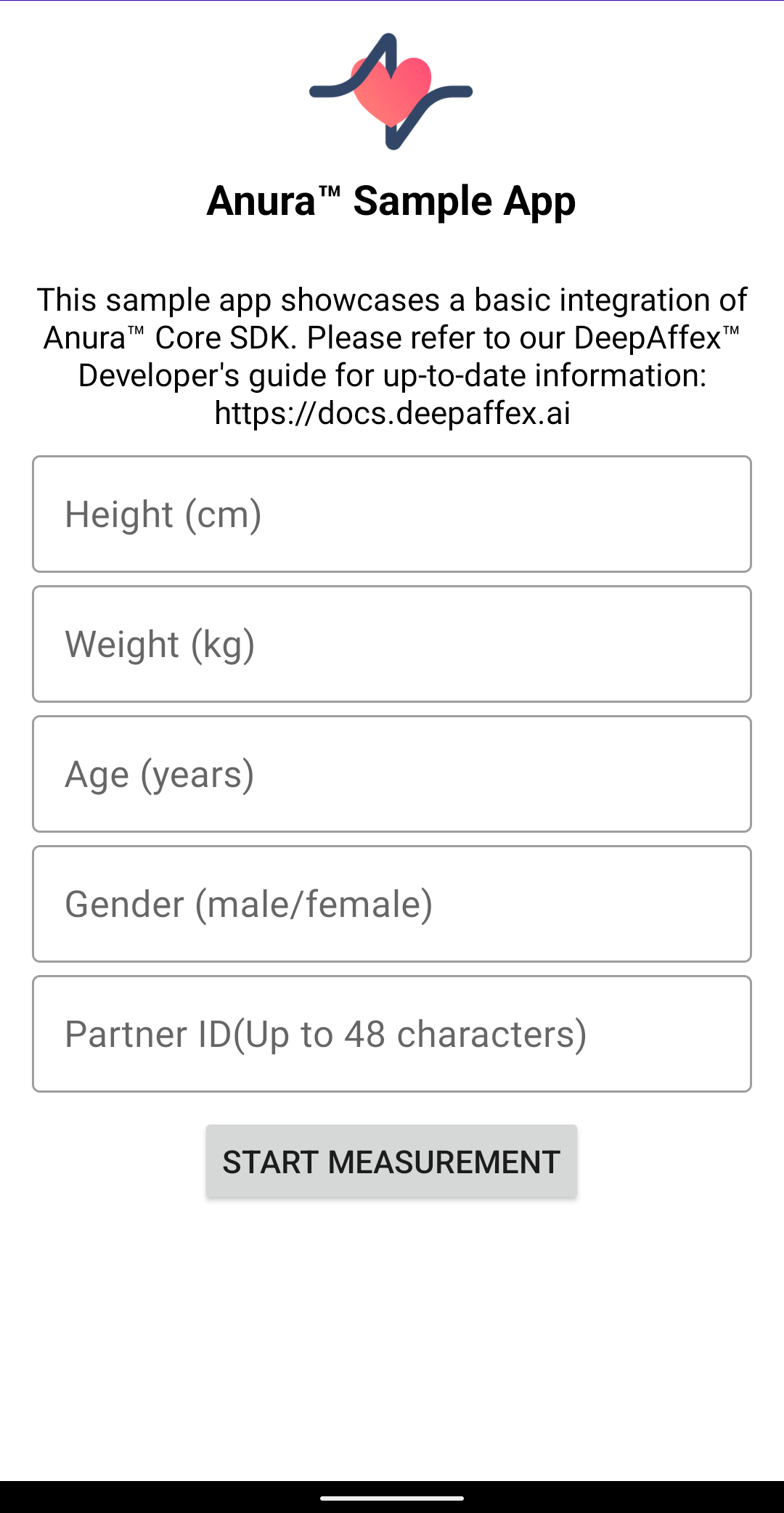

Anura Core Android Sample App

The Sample App for Android showcases a basic integration of Anura Core in Kotlin. The full source code of the application is included, and it demonstrates how to:

- Set up app configuration, including DeepAffex License and Study ID, in server.properties.
- Communicate with DeepAffex Cloud API:
  - Register your DeepAffex license key
  - Validate API access token, and refresh token if needed
  - Get DeepAffex study config file
- Initialize the measurement pipeline in AnuraExampleMeasurementActivity
- Pass user-provided demographic information (age, sex at birth, height, and weight) as part of the measurement data.
- Utilize MeasurementResults to get results from DeepAffex to display

Anura Core SDK for Android does not include any UI components for displaying results. For more information on how to interpret the results, including colour codes and value ranges for each result, please refer to the DFX Points Reference.

Screenshots

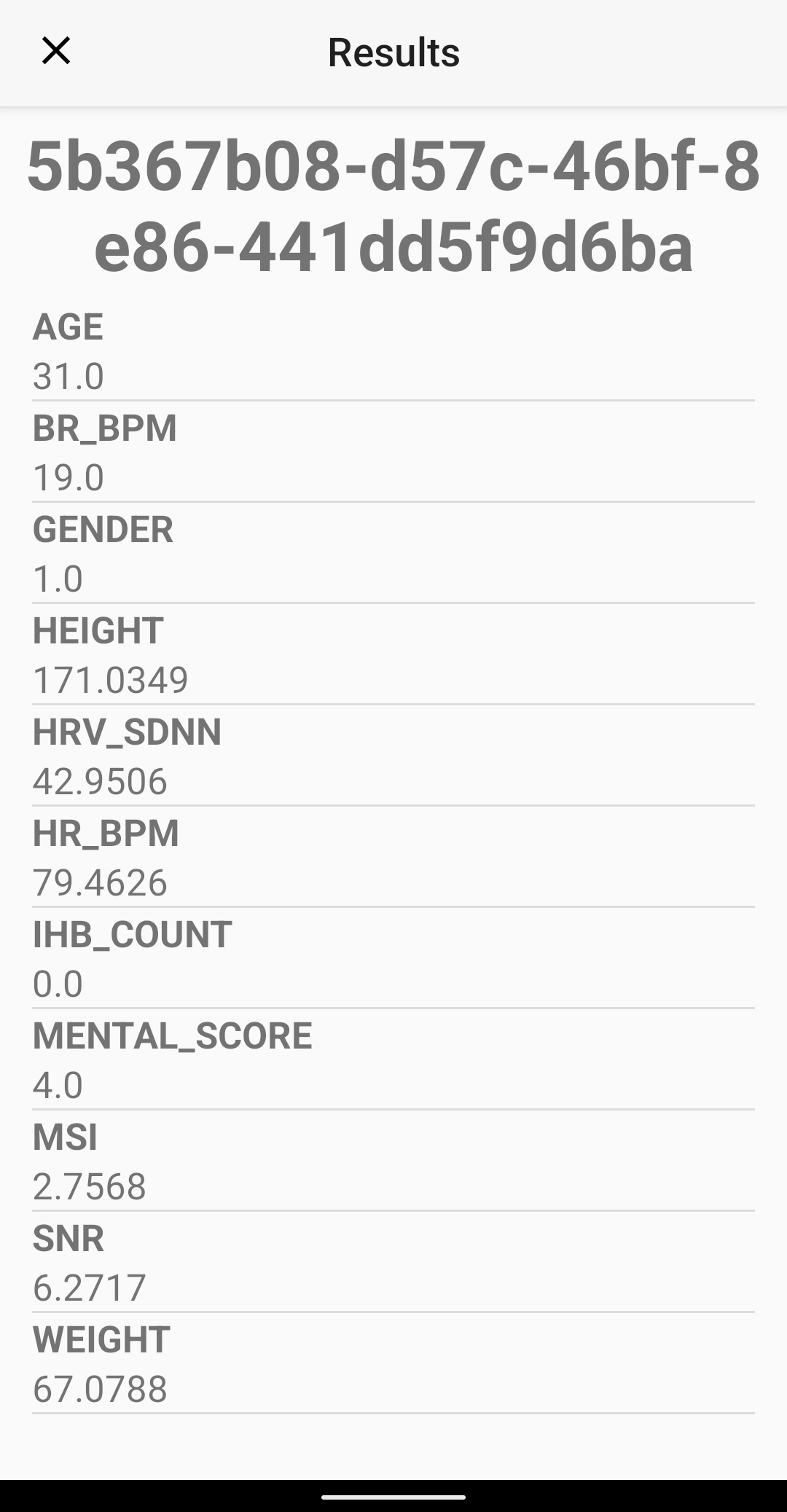

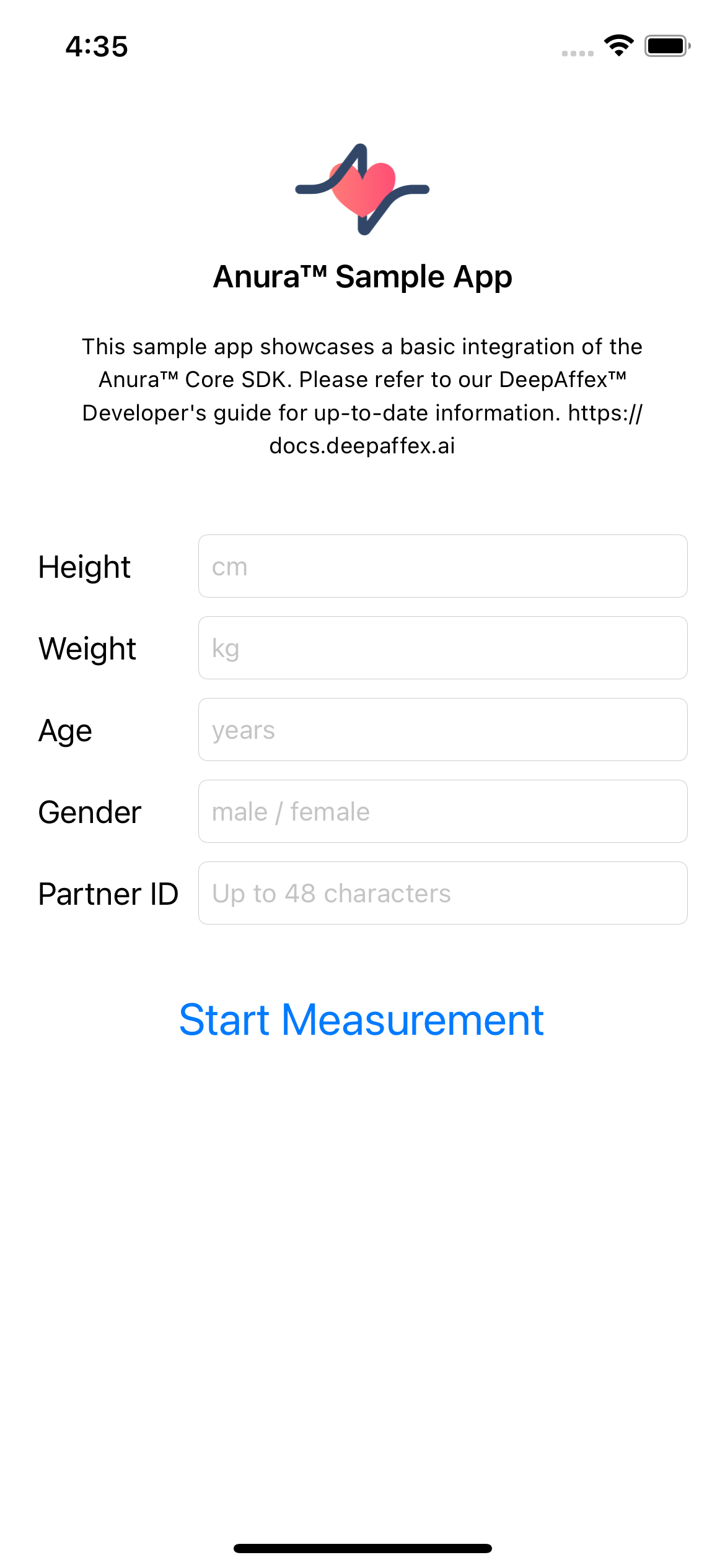

Anura Core iOS Sample App

The sample application for iOS showcases a basic integration of Anura Core in a native app written in Swift. The full source code of the sample application is included, and it demonstrates how to:

- Set up app configuration, including DeepAffex License, Study ID, and API Hostname, in Config.swift.
- Communicate with DeepAffex Cloud API:
  - Register your DeepAffex license.
  - Validate API token.
  - Create a measurement.
  - Send measurement data.
  - Receive measurement results through a WebSocket connection.
- Initialize and configure AnuraMeasurementViewController, the main UIViewController that displays the Anura measurement UI and controls the camera.
- Implement AnuraMeasurementDelegate methods, and handle various events from AnuraMeasurementViewController (e.g. face detected, measurement started/completed/cancelled, etc.)
- Pass user-provided demographic information (age, sex at birth, height, and weight) as part of the measurement data.
- Decode measurement results from DeepAffex Cloud API, with a basic display of the final results in a UITableViewController.

Anura Core SDK for iOS does not include any UI components for displaying results. For more information on how to interpret the results, including colour codes and value ranges for each result, please refer to the DFX Points Reference.

Screenshots

Adapting cameras and face trackers

Camera capture and face tracking are two important parts of the DeepAffex solution, and there are a number of third-party camera and face tracker components available in the market. To allow you to use them in your own app, Anura Core SDK provides adapter interfaces.

Anura Core SDK provides default implementations for camera and face tracker adapters. We recommend using the default implementations as demonstrated in the included Sample Apps.

Camera Adapter

Core SDK for Android provides the CameraAdapter interface. Your implementation of it is passed to VideoSource.createCameraSource, as shown below:

public interface VideoSource extends Module {

/**

* Creates a camera source instance, which is used to capture video frames and

* pass them to the down streaming pipeline.

* @param name the name of this camera source

* @param core {@link Core} the core instance

* @param format {@link VideoFormat} the format of this video source

* @param cameraAdapter {@link CameraAdapter} the implementation of camera adapter

* @return a camera source instance

*/

static VideoSource createCameraSource(

@NonNull String name,

@NonNull Core core,

@NonNull VideoFormat format,

@NonNull CameraAdapter cameraAdapter) {

return new CameraSourceImpl(name, core, format, cameraAdapter);

}

}

Face Tracker

Core SDK for Android and iOS both provide a face tracker interface:

Android provides FaceTrackerAdapter:

public class UserFaceTracker implements FaceTrackerAdapter {

... ...

}

public interface VideoPipe extends VideoSource {

/**

* Creates a face tracker pipe instance

*

* @param name the name of this pipe

* @param core {@link Core} the core instance

* @param format {@link VideoFormat} the format of this video pipe

* @param faceTracker the implementation of {@link FaceTrackerAdapter}

*/

static VideoPipe createFaceTrackerPipe(

@NonNull String name,

@NonNull Core core,

@NonNull VideoFormat format,

@NonNull FaceTrackerAdapter faceTracker) {

return new FaceTrackerPipeImpl(name, core, format, faceTracker);

}

... ...

}

and iOS provides FaceTrackerProtocol:

    public protocol FaceTrackerProtocol {
        init(quality: FaceTrackerQuality)
        func trackFace(from videoFrame: VideoFrame, completion: ((TrackedFace) -> Void)!)
        func lock()
        func unlock()
        func reset()
        var trackingBounds: CGRect { get set }
        var quality: FaceTrackerQuality { get set }
        var delegate: FaceTrackerDelegate? { get set }
    }

Localization

Anura Core SDK allows you to customize the text displayed in the measurement view to suit your application's needs. It utilizes the system's localization features to ensure that the correct language is displayed.

Platform-Specific Localization Guides

Android

For Android, Anura Core SDK uses the strings.xml file of your application for localization. The Sample Application includes example strings.xml files for various languages, which you can reference and adapt to your project.

iOS

On iOS, Anura Core SDK utilizes the Localizable.strings file of your application for localization. The Sample Application also includes example Localizable.strings files for various languages that you can use as a starting point.

Available Localization Strings

Below is a list of localized string names/keys used by Anura Core SDK:

| String Name/Key | English Reference |
|---|---|
| MEASURING_COUNTDOWN | Measurement starting in... |
| MEASURING_MSG_DEFAULT | Please centre your face within the outline |
| MEASURING_PERFECT | Perfect! Please hold still |
| MEASURING_STARTED | Measurement Started Please hold still |
| WARNING_CONSTRAINT_BACKLIGHT | The backlight is too strong Please try changing position so more light falls directly on the face |
| WARNING_CONSTRAINT_BRIGHTNESS | Your face is too bright Please try to dim the lights |
| WARNING_CONSTRAINT_DARKNESS | Your face is not well lit Please try better lighting |
| WARNING_CONSTRAINT_DISTANCE | The face is too far Please try to move closer to the camera |
| WARNING_CONSTRAINT_EXPOSURE | Please hold still while the camera is calibrating... |
| WARNING_CONSTRAINT_FPS | The camera is not maintaining the required frame rate Please try closing all background applications |
| WARNING_CONSTRAINT_GAZE | Please look directly into the camera |
| WARNING_CONSTRAINT_MOVEMENT | Too much movement Please hold still |
| WARNING_CONSTRAINT_POSITION | Try to keep your face within the outline |
| WARNING_CONSTRAINT_TOO_CLOSE | Please move a little further away |
| WARNING_CONSTRAINT_TOO_FAR | Please move a little closer |
| WARNING_LOW_POWER_MODE | For better accuracy, please disable Low Power Mode |
| MEASUREMENT_CANCELED | Measurement cancelled |
| ERR_CONSTRAINT_BRIGHTNESS | Your face was too bright Please try to dim the lights |
| ERR_CONSTRAINT_DARKNESS | Your face was not well lit Please try better lighting |
| ERR_CONSTRAINT_DISTANCE | Please move closer to the camera and try again |
| ERR_CONSTRAINT_FPS | The camera is not maintaining the required frame rate Please try closing all background applications |
| ERR_CONSTRAINT_GAZE | Please directly face the camera throughout the measurement |
| ERR_CONSTRAINT_MOVEMENT | Please hold still throughout the measurement |
| ERR_CONSTRAINT_NO_NETWORK | Please make sure you're connected to WiFi or a mobile network |
| ERR_CONSTRAINT_POSITION | Please keep your face centred within the outline throughout the measurement |
| ERR_CONSTRAINT_TOO_FAR | Please stay close to the camera throughout the measurement |
| ERR_MSG_SNR | The blood flow measurement was not reliable Please try to allow the light to fall evenly across your face while holding still |
| ERR_MSG_SNR_SHORT | Blood flow measurement was not reliable. Please try again |

Measurement Questionnaire

Measurement questionnaires are used to further enhance the accuracy of the measurement results. To learn more about questionnaires, please refer to the Measurement Questionnaire section in our developer guide.

Interpreting Results

The DeepAffex Cloud can process blood flow information and provide estimates for many different biological signals. Please refer to the DFX Points Reference for further interpretation of these signals.

Best Practices

DeepAffex License Registration and Token Validation

- Use the provided DeepAffex license key to register the license by calling the Organizations.RegisterLicense endpoint. A pair of tokens (a Device Token and a One-Time Refresh Token) is returned. To learn more about tokens, please refer to the authentication chapter in our developer guide.

- Store tokens safely in the device's secure storage. Tokens must be used for any subsequent DeepAffex API calls.

- To ensure that a token is valid before making a DeepAffex Cloud API call, you can call the General.VerifyToken endpoint, which returns the token's status.

- Your application should call the Auths.Renew endpoint if the Device Token has expired. If the Refresh Token has also expired or is not available, your application may need to call the Organizations.RegisterLicense endpoint again to obtain a fresh pair of Device and Refresh Tokens.

If the ActiveLicense field in the General.VerifyToken response is false, please contact NuraLogix to renew your DeepAffex license. Your application is responsible for handling this failure case gracefully.
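The token checks above can be read as a small decision procedure. The sketch below is a minimal illustration in plain Java and is not part of Anura Core SDK: the `TokenStartupFlow` class, its enums, and the `nextAction` method are hypothetical names. It assumes your app has already mapped the General.VerifyToken response onto a token status and the ActiveLicense flag, and it leaves the actual calls to Organizations.RegisterLicense and Auths.Renew to your API client.

```java
// Hypothetical sketch: these types are illustrative and are not SDK or DeepAffex client APIs.
final class TokenStartupFlow {

    /** Simplified view of the stored Device Token (assumed mapping of the verify response). */
    enum TokenStatus { VALID, DEVICE_TOKEN_EXPIRED, NO_TOKEN }

    /** What the application should do next. */
    enum Action { PROCEED, RENEW_TOKEN, REGISTER_LICENSE, LICENSE_INACTIVE }

    /**
     * Picks the next step based on the token status, the ActiveLicense flag,
     * and whether a usable Refresh Token is stored on the device.
     */
    static Action nextAction(TokenStatus status, boolean activeLicense, boolean refreshTokenUsable) {
        switch (status) {
            case NO_TOKEN:
                // Nothing stored yet: register the license to obtain a token pair.
                return Action.REGISTER_LICENSE;
            case DEVICE_TOKEN_EXPIRED:
                // Renew if possible, otherwise fall back to registering again.
                return refreshTokenUsable ? Action.RENEW_TOKEN : Action.REGISTER_LICENSE;
            default:
                // Token is valid: an inactive license must still be handled gracefully.
                return activeLicense ? Action.PROCEED : Action.LICENSE_INACTIVE;
        }
    }
}
```

An app could call `nextAction` once at startup and again whenever an API call reports an expired token, showing a friendly message for `LICENSE_INACTIVE` instead of starting a measurement.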

Measurement Questionnaire and User Demographics

- Upon your app's initial launch, prompt the user to complete the measurement questionnaire. For further details on questionnaires, please refer to the Measurement Questionnaire Section in our developer's guide. This information will enhance the accuracy of future measurements, some of which will depend on these data.

Taking Measurements

- Your application needs to request camera permission before taking measurements. Anura Core SDK will not work without access to the camera, and your application needs to handle the case when the user denies the camera permission.

- You can customize the look and feel of the measurement UI through MeasurementUIConfiguration. To learn more about how to customize the measurement UI, please refer to Measurement UI Customization iOS and Measurement UI Customization Android.

- To obtain measurement results in real-time, your application can set up a WebSocket connection and use the Measurements.SubscribeResults endpoint. After the measurement is complete and all results are received, your app may disconnect the WebSocket connection.

- Before your app can start sending measurement payloads to obtain results, it must first create a measurement using the Measurement.Create endpoint. The endpoint will return a measurement ID, which is used to refer to the measurement in subsequent API calls.

- After the measurement starts, each extracted payload needs to be sent to DeepAffex Cloud in sequence. For information about this interface, please refer to the Measurement.AddData endpoint.

- If your application is subscribed to results over WebSocket, the results can be obtained from the MeasurementResults object. Your application can then display these results on the measurement screen (e.g. heart rate), and on a separate results screen after the measurement is complete. For more information on how to interpret the results, please refer to the DFX Points Reference.

- With Anura Core SDK, you must always take measurements in anonymous mode using Partner ID. Please do not use Users and Profiles related API Endpoints.
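The ordering requirement in the list above (create the measurement first, then send each payload in sequence) can be enforced with a thin guard around your API client. The following is only a sketch under stated assumptions: `MeasurementSession` and its nested `CloudApi` interface are hypothetical stand-ins for whatever client your app uses, and the chunk index parameter is an illustrative way to track sequence, not a documented field.

```java
// Hypothetical sketch: MeasurementSession and CloudApi are illustrative, not SDK types.
final class MeasurementSession {

    /** Stand-in for the app's own DeepAffex Cloud client. */
    interface CloudApi {
        String createMeasurement();                                          // stands in for Measurement.Create
        void addData(String measurementId, int chunkIndex, byte[] payload);  // stands in for Measurement.AddData
    }

    private final CloudApi api;
    private String measurementId;   // null until the measurement has been created
    private int nextChunkIndex = 0; // index of the payload expected next

    MeasurementSession(CloudApi api) {
        this.api = api;
    }

    /** Creates the measurement and remembers its ID for subsequent calls. */
    void start() {
        measurementId = api.createMeasurement();
        nextChunkIndex = 0;
    }

    /** Sends one payload, rejecting calls made before start() or out of order. */
    void sendPayload(int chunkIndex, byte[] payload) {
        if (measurementId == null) {
            throw new IllegalStateException("Create the measurement before sending payloads");
        }
        if (chunkIndex != nextChunkIndex) {
            throw new IllegalStateException("Payload " + chunkIndex + " is out of sequence");
        }
        api.addData(measurementId, chunkIndex, payload);
        nextChunkIndex++;
    }
}
```

Failing fast on an out-of-order payload is one possible design choice; an app could instead queue early payloads until their turn comes.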

Cross-Platform Apps

Anura Core is a native SDK for iOS and Android. It can be integrated into applications that are built using cross-platform frameworks such as React Native, Flutter, Cordova, and Ionic.

We recommend that you follow the official guides of the cross-platform framework of your choice to integrate it into your application. The links below are to the native component integration section in the official guides of some of the most common cross-platform frameworks:

Platform requirements

Camera Resolution:

- Minimum pixel density of ~30 pixels/inch (~12 pixels/cm) applied to the face

- In a mobile phone "selfie portrait" scenario, this converts to an image resolution of 480p (i.e. 640 x 480, 4:3 aspect)

Camera Frame Rate:

- Minimum: 25 frames per second

- Recommended: 30 frames per second

- Accurate frame timestamps (in milliseconds) are required
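If your app supplies its own camera module, the millisecond timestamps mentioned above can also be used to sanity-check the delivered frame rate. The following plain-Java sketch is a rough illustration: `FrameRateCheck` and its methods are hypothetical helpers rather than SDK APIs, and averaging over the whole timestamp window is an assumption.

```java
// Hypothetical helper: not part of Anura Core SDK.
final class FrameRateCheck {

    static final double MIN_FPS = 25.0;          // minimum stated in this guide
    static final double RECOMMENDED_FPS = 30.0;  // recommended rate stated in this guide

    /**
     * Average frames per second over a window of frame timestamps in milliseconds.
     * Returns 0 when there are fewer than two frames or the time span is not positive.
     */
    static double averageFps(long[] timestampsMs) {
        if (timestampsMs.length < 2) {
            return 0.0;
        }
        long spanMs = timestampsMs[timestampsMs.length - 1] - timestampsMs[0];
        if (spanMs <= 0) {
            return 0.0;
        }
        // N timestamps span N - 1 frame intervals.
        return (timestampsMs.length - 1) * 1000.0 / spanMs;
    }

    /** True when the average rate reaches the minimum frame rate. */
    static boolean meetsMinimum(long[] timestampsMs) {
        return averageFps(timestampsMs) >= MIN_FPS;
    }
}
```

For example, frames arriving every 40 ms average 25 fps and pass `meetsMinimum`, while frames arriving every 50 ms average 20 fps and do not.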

Face Tracker:

- External face tracker that provides MPEG-4 FBA feature points

- Anura Core SDK includes support for MediaPipe FaceMesh by default. Support for visage|SDK FaceTrack is also included.

Supported CPU Architectures:

- ARMv8-A (64-bit): Android and iOS

Supported OS bindings/wrappers:

- Android: Java and Kotlin

- iOS: Swift and Objective-C

- Cross-Platform: Please refer to the Cross-Platform Apps section for more information

OS Specific Requirements:

Android

- OS Version: Android 7.1 (API level 25) and later

- Camera2 API: The platform must support Camera2 API, specifically with FULL or LEVEL_3 support. Platforms that only have Camera2 API LEGACY are not supported by Anura Core SDK. While it may still run, measurement performance cannot be guaranteed.

For more information on Camera APIs on Android, please refer to these online resources:

iOS

- Minimum OS Version: iOS 13.0 and later

- iPadOS 13.0 and later are also supported

Platform Performance Guidelines:

- Comparable with Snapdragon 632 or better for Android

- Comparable with Apple A10 or better for iOS

- Minimal RAM footprint (6x frame buffer overhead)

Internet connectivity requirements

The payload data chunks are not very heavy (mostly less than 500 KB). Any stable internet connection which can handle such payloads is expected to work fine.

Troubleshooting

SNR-related issues

Low SNR (Signal-to-Noise Ratio) can lead to measurement cancellations. By default, Anura Core SDK follows the rules given in this guide to cancel a measurement early based on SNR.

For more information about SNR related issues and how to solve them, please refer to this troubleshooting guide.

Visage Face Tracker Issues

As of Anura Core SDK for iOS (1.9.0) and Android (2.4.0), the default face tracker is MediaPipe FaceMesh. This troubleshooting section only applies to applications still using visage|SDK FaceTrack.

Managing your Visage License File

Your visage|SDK FaceTrack license is distributed as a file with the extension .vlc.

- When you receive your license file, please ensure that the Application ID in it matches your app's bundle ID. Please contact NuraLogix if it doesn't.

- You should treat your license file as a binary file. If you plan to check it into Git, configure it as a binary file using the following code in your .gitattributes file: *.vlc -merge -text

Using your Visage license file

- For Anura Core SDK for Android

  - Copy your visage|SDK FaceTrack license file to app/src/main/assets/visage/

  - Update your server.properties file with your visage|SDK FaceTrack license key (typically, it's the filename of the .vlc file excluding the file extension).

  - Ensure that the APPLICATION_ID exactly matches the Application ID field in your visage|SDK FaceTrack license file.

- For Anura Core SDK for iOS

  - Replace the placeholder license file in SampleApp/VisageFaceTracker/visage.vlc with the license file provided by NuraLogix. (Do not copy and paste the contents of the license file into the Sample App's visage|SDK FaceTrack file.)

Visage Licensing Error Codes

Please check the following common error codes if you are facing issues with visage|SDK FaceTrack licensing:

- Error code 0x00000001 (VS_ERROR_INVALID_LICENSE): This error indicates that the license is not valid. The error occurs when the BundleID is not the same as the one in your application, or when the license that is registered to one product is used with another product. Please check if you're using the correct license.

- Error code 0x00000002 (VS_ERROR_EXPIRED_LICENSE): This error indicates that your Visage license has expired. Please consider renewing your license. If you obtained your license directly from Visage Technologies, please contact them; if you obtained your license through NuraLogix, please contact NuraLogix.

- Error code 0x00000008 (VS_ERROR_MISSING_KEYFILE): This error indicates that the application cannot locate the license key file. Please verify that the license key file is present in the folder used as a path to initialize the license manager.

- Error code 0x00000100 (VS_ERROR_TAMPERED_KEYFILE): This error indicates that the license key file was modified. Please try using a clean copy of the license key file that was sent to you, and see if the error still occurs.

- Error code 0x00000010 (VS_ERROR_NO_LICENSE): This error indicates that there is currently no license available.

- Error code 0x00001000 (VS_ERROR_INVALID_OS): This error indicates that your license key does not support the platform (operating system) on which you are trying to use it.
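When logging licensing failures, it may help to translate the numeric code into the name listed above. The following plain-Java sketch is a hypothetical helper (`VisageLicenseErrors` is not part of visage|SDK or Anura Core SDK). It only mirrors the codes documented in this section and reports anything else as unknown.

```java
// Hypothetical helper: mirrors only the error codes listed in this guide.
final class VisageLicenseErrors {

    /** Returns the documented name for a licensing error code, or "UNKNOWN". */
    static String nameOf(int code) {
        switch (code) {
            case 0x00000001: return "VS_ERROR_INVALID_LICENSE";
            case 0x00000002: return "VS_ERROR_EXPIRED_LICENSE";
            case 0x00000008: return "VS_ERROR_MISSING_KEYFILE";
            case 0x00000010: return "VS_ERROR_NO_LICENSE";
            case 0x00000100: return "VS_ERROR_TAMPERED_KEYFILE";
            case 0x00001000: return "VS_ERROR_INVALID_OS";
            default:         return "UNKNOWN";
        }
    }

    /** Formats a code for log output, e.g. "0x00000002 (VS_ERROR_EXPIRED_LICENSE)". */
    static String describe(int code) {
        return String.format("0x%08X (%s)", code, nameOf(code));
    }
}
```

A log line built with `describe` keeps both the raw value and the readable name, which makes support requests easier to triage.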

Anura Core SDK Android 2.4.0 Migration Guide

Anura Core SDK Android 2.4.0 is a major update that includes the following improvements and features:

- Significant camera and face tracker performance improvements

- New measurement UI that utilizes the device's screen as a light source for more accurate and reliable measurements

- Replaced the existing Visage FaceTracker with MediaPipe face tracker for improved performance and simplified integration

- Improved WebSocket handling in poor network conditions

- Simplified measurement results parsing

- DeepAffex token security enhancements

Upgrading to Anura Core Android 2.4.0

A few steps are required to upgrade your existing app from Anura Core 2.3.x to 2.4.0.

Updating SDK Binaries

Starting with Anura Core SDK 2.4.10, SDK dependencies are no longer bundled as .aar files in the libs folder. Instead, dependencies are now fetched from Maven.

- Set up access to NuraLogix's Maven by following the instructions at Setting up Maven access.

- Remove any .aar files previously added manually in the libs folder, as they are now managed via Maven.

- Remove the old mediapipe dependencies if present:

      implementation "com.google.mediapipe:solution-core:$mediaPipeVersion"
      implementation "com.google.mediapipe:facemesh:$mediaPipeVersion"

Adopting the new UI

Anura Core SDK 2.4.0 features a new measurement UI that utilizes the device's screen as a light source for more accurate and reliable measurements.

Adopting the new measurement UI is as simple as passing a new instance of MeasurementUIConfiguration to your application's MeasurementView instance:

measurementView.setMeasurementUIConfig(MeasurementUIConfiguration())

If you would like to continue to use the existing UI, you can use MeasurementUIConfiguration.anuraDefaultLegacyUIConfiguration instead:

measurementView.setMeasurementUIConfig(MeasurementUIConfiguration.anuraDefaultLegacyUIConfiguration)

The measurement UI is also now easy to configure. Here are all of the configuration options:

    // create a custom UI configuration and set the desired values
    val customMeasurementUIConfig = MeasurementUIConfiguration().apply {
        measurementOutlineStyle = MeasurementUIConfiguration.MeasurementOutlineStyle.OVAL
        showHistograms = true
        overlayBackgroundColor = Color.WHITE
        measurementOutlineActiveColor = Color.BLACK
        measurementOutlineInactiveColor = Color.WHITE
        histogramActiveColor = Color.YELLOW
        histogramInactiveColor = Color.GREEN
        statusMessagesTextColor = Color.CYAN
        timerTextColor = Color.BLUE
    }

    // set the measurement view's configuration
    measurementView.setMeasurementUIConfig(customMeasurementUIConfig)

The updated Sample App also includes code to automatically maximize the brightness of the screen when a face is detected and during the measurement. Your existing application should be updated to include this functionality to best utilize the device's screen brightness as a light source.

Face Position & Distance Constraint

As part of the new measurement UI, Anura Core SDK 2.4.0 requires users to come closer to the device's camera. This is done to allow the light from the device's screen to illuminate the user's face and get more reliable and accurate measurements. You should update your application's DfxPipeConfiguration instance to set CHECK_FACE_DISTANCE to true as shown below:

    private fun getDfxPipeConfiguration(): DfxPipeConfiguration {
        val dfxPipeConfiguration =
            DfxPipeConfiguration(applicationContext, null)

        // set face_distance constraint on
        dfxPipeConfiguration.setRuntimeParameter(
            DfxPipeConfiguration.RuntimeKey.CHECK_FACE_DISTANCE,
            true
        )

        return dfxPipeConfiguration
    }

The updated Sample App also includes code that instructs users to come closer before the measurement starts. Please refer to the Sample App's AnuraExampleMeasurementActivity code, specifically the onMeasurementConstraint() callback method.

MeasurementPipeline Updates

The updated MeasurementPipeline interface and default implementation utilize a new MeasurementState class to manage the internal state. Your application can observe the state as demonstrated in the updated Sample App:

    override fun onMeasurementStateLive(measurementState: LiveData<MeasurementState>?) {
        measurementState?.observe(this) { newState: MeasurementState ->
            handleMeasurementViewState(state = newState)
        }
    }

Refer to the Sample App's example implementation of handleMeasurementViewState() in AnuraExampleMeasurementActivity to see how to handle state changes.

The updated MeasurementPipeline also includes a new method for determining if a measurement should be cancelled early:

measurementPipeline.shouldCancelMeasurement(results.snr, resultIndex)

In previous versions of the Sample App, the measurement early cancellation logic was in the example code. If your application was using that code, you should replace that code with a call to this new method.

MediaPipe Face Tracker

Anura Core Android 2.4.0 includes support for MediaPipe face tracker which provides improved performance, a smaller binary size, and simplifies integration with your application.

To start using MediaPipe, include it as a dependency in your application's build.gradle:

    mediaPipeVersion = "0.10.2"
    implementation("com.google.mediapipe:solution-core:$mediaPipeVersion")
    implementation("com.google.mediapipe:facemesh:$mediaPipeVersion")

You may also remove Visage FaceTracker and its dependencies and assets:

- anura-visage-2.3.0.aar
- visage assets folder
- Any .vlc license file

MediaPipe is not tied to your application ID, and does not require a separate license file for each variant of your application.

Visage FaceTrack support

If you would like to continue using Visage FaceTrack, please use the updated anura-visage-2.4.0.XXXX.aar. The updated binaries and Visage assets folder are included under this repo's /visage directory.

New MeasurementResults Class

The new MeasurementResults class replaces the existing AnalyzerResult and AnalyzerResult2 classes for parsing real-time results from DeepAffex Cloud.

MeasurementResults has a simple allResults interface to get all the available results and notes for each point in key/value pairs.

MeasurementResults can be serialized into JSON or parceled for passing to another Android activity.

AnalyzerResult and AnalyzerResult2 classes are still available in Anura Core Android 2.4.0, but these are deprecated and will be removed in the future.

MeasurementResults requires kotlinx.serialization to work. If your application does not use kotlinx.serialization, follow these steps:

- Update kotlin_version to '1.8.0' or newer

- Add the Kotlin serialization Gradle plugin to your project's build.gradle:

      classpath "org.jetbrains.kotlin:kotlin-serialization:$kotlin_version"

- Add the Kotlin serialization dependency to your application's build.gradle:

      implementation "org.jetbrains.kotlinx:kotlinx-serialization-json:1.1.0"

- Optional: If your application does not utilize getDefaultProguardFile in its build.gradle file, you need to add the ProGuard rules for kotlin-serialization to your proguard-rules.pro file so that serialization does not break when the code is minified and obfuscated in release mode.

Removing Protobufs

Protobufs are no longer used in Anura Core Android 2.4.0, and can be removed from your application's dependencies.

If your application encountered the duplicate protobuf classes issue when using Anura Core SDK 2.3.x together with Google Firebase, you may safely stop using the workaround we provided for it: https://github.com/kamalh16/protobuf-javalite-firebase-wellknowntypes

Improved DeepAffex Cloud API Client

Anura Core Android 2.4.0 includes an improved DeepAffex Cloud API client that can better handle WebSocket connections in poor network conditions.

Your application will automatically get these improvements by simply using the latest Anura Core SDK Android 2.4.0 binaries and implementing the TokenStore. Please refer to the included SampleTokenStore for an example.

An important security update to the DeepAffex Cloud API was released on November 28, 2023, which expires tokens after a fixed duration. This will impact your user's experience if you choose to keep using the older Anura Core SDKs. Upgrading to the latest Anura Core SDKs ensures seamless adaptation to these API changes.

Miscellaneous Changes

ProGuard Rules

Anura Core SDK 2.4.0 includes consumer ProGuard rules, so your application will no longer need to maintain its own ProGuard rules to support Anura Core SDK if used in conjunction with getDefaultProguardFile in build.gradle:

proguardFiles getDefaultProguardFile('proguard-android-optimize.txt')

You may safely remove existing ProGuard rules from your application that were added to support previous versions of Anura Core SDK.

RestClient

The token has been renamed to actionId.

The endpoint getLicenseStatus has been renamed to verifyToken to better match the API naming.

The constant RestClient.ACTION_EXPIRE_LICENSE has changed to RestClient.ACTION_VERIFY_TOKEN.

AnuraError

The AnuraError class has been split into Network-related errors and Core-related errors.

For example, AnuraError.LICENSE_EXPIRED is now AnuraError.Network.LICENSE_EXPIRED.

Network errors

RestClient's onError callback now provides an AnuraError.NetworkErrorInfo data class rather than just a message and token number.

The class provides the actionId, errorCode, errorMessage and responseCode.

Measurement Pipeline Listener

Added the callback method onMeasurementFaceDetected, which provides a boolean isFaceDetected.

Added two other callback methods for debugging purposes, onCameraFrameRate and onFaceTrackerFrameRate, which provide the measured frames per second (fps).

Anura Core SDK iOS 1.9.0 Migration Guide

Anura Core SDK iOS 1.9.0 is a major update that includes the following improvements and features:

- New measurement UI that utilizes the device's screen as a light source for more accurate and reliable measurements

- Replaced the existing visage|SDK FaceTrack with MediaPipe FaceMesh for improved performance and simplified integration

- Improved WebSocket handling in poor network conditions

- DeepAffex token security enhancements

Upgrading to Anura Core iOS 1.9.0

A few steps are required to upgrade your existing app from Anura Core 1.8.x to 1.9.0.

Updating SDK Binaries

Replace the existing AnuraCore.xcframework and libdfx.xcframework frameworks

in your application with the latest versions.

Adopting the new UI

Anura Core SDK 1.9.0 features a new measurement UI that utilizes the device's screen as a light source for more accurate and reliable measurements.

Existing applications will adopt the new measurement UI and behaviour automatically through the defaultConfiguration property of MeasurementConfiguration and MeasurementUIConfiguration. If your application needs to maintain the UI and behaviour of previous versions of Anura Core SDK, you may use the corresponding defaultLegacyConfiguration property instead.

MediaPipe FaceMesh

Anura Core SDK 1.9.0 includes support for MediaPipe FaceMesh which provides improved performance, a smaller binary size, and simplifies integration with your application.

To start using MediaPipe, you will need to add FaceMesh.xcframework to your application's embedded frameworks. You can simply initialize an instance of MediaPipeFaceTracker and pass it to AnuraMeasurementViewController during initialization. Be sure to add <AnuraCore/MediaPipeFaceTracker.h> to your application's bridging header.

You may also remove visage|SDK FaceTrack and its dependencies and assets:

- visageSDK-iOS folder
- bdtsdata assets folder
- libVisageVision.a static library
- TFPlugin.xcframework dynamic library
- Any .vlc license files

You may also update your Xcode build target's settings to remove any settings related to visage|SDK FaceTrack:

- Remove "$(SRCROOT)/Frameworks/visageSDK-iOS/include" from Header Search Paths
- Remove VISAGE_STATIC and IOS from Preprocessor Macros

Note: MediaPipe is not tied to your application ID, and does not require a separate license file for each variant of your application.

visage|SDK FaceTrack support

If you would like to continue using visage|SDK FaceTrack, you may use the Visage classes and binaries provided in previous versions of Anura Core SDK.

MeasurementResults Changes

The MeasurementResults class has been updated to parse the Notes field from DeepAffex Cloud, which provides additional information about the computation of the results. The allResults property has been changed to return [String: SignalResult] instead of [String: Double], with SignalResult having two properties: value: Double and notes: [String].

Your application should be updated to read the value property for each SignalResult. The notes property contains additional information about the computation of that result. For more information on notes, please refer to the DeepAffex Cloud API - Subscribe to Results endpoint documentation.

Early Measurement Cancellation Logic

AnuraMeasurementViewController includes a new shouldCancelMeasurement(snr: Double, chunkOrder: Int) -> Bool method to determine if a measurement should be cancelled early.

In previous versions of the Sample App, the measurement early cancellation logic was in the example code. If your application was using that code, you should replace it with a call to this new method.

Improved DeepAffex Cloud API Client

Anura Core SDK iOS 1.9.0 includes an improved DeepAffex Cloud API client that can better handle WebSocket connections in poor network conditions.

Your application will get these improvements by using the updated DeepAffexMiniAPIClient and MeasurementResultsSubscriber classes included in the Sample App.

An important security update to the DeepAffex Cloud API was released on November 28, 2023, which expires tokens after a fixed duration. This will impact your user's experience if you choose to keep using the older Anura Core SDKs. Upgrading to the latest Anura Core SDKs ensures seamless adaptation to these API changes.

Your application must use the new beginStartupFlow(_:) method of DeepAffexMiniAPIClient to ensure that it has a valid token prior to taking a measurement. This method encapsulates the code that was in previous versions of StartViewController, and your application should replace that code with a call to the new beginStartupFlow(_:) method.

Anura Core SDK Frequently Asked Questions

Which devices is Anura Core SDK supported on?

Please refer to the Platform requirements page in the Anura Core SDK Developer Guide.

How is Anura Core SDK distributed?

Anura Core SDK is distributed privately via secure Git repositories.

How can I integrate Anura Core into my Android app?

Please refer to the Anura Core Android section in our developer guide.

How can I integrate Anura Core into my iOS app?

Please refer to the Anura Core iOS section in our developer guide.

How can I integrate Anura Core into my web application?

Anura Core is designed for native mobile apps (iOS and Android). To integrate DeepAffex technology into your web app, you can use the NuraLogix Web Measurement Service (WMS).

Where can I find the DeepAffex Extraction Library for Android or iOS?

For Android and iOS, the DeepAffex Extraction Library is embedded into the Anura Core SDK and is not distributed separately.

Can the measurement UI in the Anura Core SDK be customized?

Yes, the UI can be customized.

On iOS, the default colors, fonts, and images can be changed using MeasurementUIConfiguration. If you need more customization, you can hide all the existing elements using MeasurementUIConfiguration and implement your own UI by subclassing AnuraMeasurementViewController or directly adding your own views to the UIViewController.

On Android, you have direct access to the UI as demonstrated in the sample app.

Are the measurement result dials and screens of Anura Lite part of the Anura Core SDK?

Only the measurement UI is part of the Anura Core SDK. The measurement result dials are not part of the SDK. Other screens, if included, may be different from Anura Lite.

How does the data flow in the SDK? Does the SDK store the face scan data locally and then make an API call to server to get the results? Or will we have to make the API call?

The SDKs and Sample App do not use any local storage, except for storing the API token. The data flow is documented in the Sample Apps and also in our Anura Core SDK Developer Guide.

How to modify the Anura logo at the top of the measurement screen in the Android SDK?

You can use setIconImageResource to change the logo at the top of the measurement screen. You can use measurementView.showAnuraIcon(false) to hide it.

How to change the Anura logo at the top of the measurement screen in the iOS SDK?

You can use uiConfig.logoImage to change the logo at the top of the measurement screen. You can set uiConfig.logoImage to nil to hide it.

How to add medical history questionnaire in the Android SDK?

You can access and set the properties of the measurementQuestionnaire as follows:

    measurementQuestionnaire.smoking = true
    measurementQuestionnaire.bloodPressureMedication = true
    measurementQuestionnaire.setDiabetes(Diabetes.TYPE1_STRING)

You can also refer to the Measurement Questionnaire section.

How to add medical history questionnaire in the iOS SDK?

An example of setting user demographics is given in the MeasurementDelegate.swift file in the Anura Core SDK sample app. You can follow that example to set the medical history questionnaire. Another way is to modify the AnuraUser struct to add the medical questionnaire.

Release Notes

Below are links to the release notes for the Anura® Core SDK for Android and the Anura® Core SDK for iOS. For each release, we include information about new features being introduced, changes to existing features, fixes to known issues and any necessary workarounds.

To stay informed about future changes, you can subscribe to any of the above release notes pages. Simply click the "Subscribe to these release notes" button on the individual page to receive notifications when new updates are published.

Anura Core SDK Developer Guide Changelog

1.23.0 - 2025-10-29

- Updated the Anura Core Android page and the migration guide as per the Anura® Core SDK for Android 2.4.10

- Updated the package information page to match Anura Core Android SDK 2.4.10

- Added a note about DeepAffex® license on the sample applications page

1.22.0 - 2025-05-16

- Added a new chapter about release notes

- Added information about configuring constraints in the Anura Core iOS SDK

1.21.0 - 2024-12-12

- Made the following updates on the Anura Core Android page:

  - Updated demographics section to use MeasurementQuestionnaire class

  - Updated the usage of startMeasurement to include partner ID changes

  - Updated the dependencies section

  - Added a section explaining and showing code examples of the various components of the pipeline and how to create a pipeline using them

- Fixed a broken link on the Cross-Platform Apps page

1.20.0 - 2024-10-16

- Added information about adding the medical history questionnaire on the FAQ page

- Added a note about not using Users and Profiles related API Endpoints on the best practices page

1.19.0 - 2024-06-21

- Internal improvements

1.18.0 - 2024-05-10

- Added a section on localization

1.17.0 - 2024-05-03

- Language and formatting updates

1.16.0 - 2024-03-01

- Removed a note about iOS Simulator support on Intel-based Macs

1.15.0 - 2023-12-04

- Updated the information about the DeepAffex Cloud API changes in the Android and iOS migration guides

1.14.0 - 2023-11-07

- Updated the iOS Package Information, Anura Core iOS, Sample Applications, Platform Requirements, and Troubleshooting pages

- Updated Sample application images to match Anura Core SDK 1.9.0

- Updated the Best Practices chapter with information on DeepAffex token renewal

- Added a new chapter - iOS 1.9.0 SDK Migration Guide

- Updated the release date of the upcoming DeepAffex Cloud API changes

- Added a new FAQ

- Updated the About This Guide page

1.13.0 - 2023-09-20

- Added information about how to modify icons in the Android SDK

- Some language edits

1.12.0 - 2023-08-31

- Added a Frequently Asked Questions section

1.11.0 - 2023-08-04

- Updated the Android Package Information, Anura Core Android, Sample Applications, Platform Requirements, and Troubleshooting pages

- Updated Sample application images to match Anura Core SDK 2.4.0

- Added a new chapter - Android 2.4.0 SDK Migration Guide

1.10.0 - 2023-07-13

- Removed the Partner ID mentioned on the Sample Applications page

- Added Xamarin under the cross-platform section

1.9.0 - 2023-05-29

- Added more information about the API levels on the platform requirements page

- Updated the iOS Package Information and the iOS project screenshot on the package information page

- Updated the iOS Core SDK usage description and Measurement UI Customization on the Anura Core iOS page

- Updated the iOS SDK Binaries size based on arm64 architecture on the package information page

1.8.0 - 2023-04-19

- Added an SNR-related issues section on the troubleshooting page

- Updated the iOS Sample App instructions and the FaceTrackerProtocol code

- Separated iOS and Android best practices sections and updated the iOS part

- Formatting improvements

1.7.0 - 2023-02-14

- Updated server.properties file info on the Anura Core Android page

- Added visage instructions for Android on the troubleshooting page

- Reformatted the troubleshooting page

1.6.0 - 2022-11-28

- Removed content from the questionnaires page and referred to the developers guide

- Removed content from the results section

- Added info about adding a fixed cluster URL in Android sample app

- Added a disclaimer page

- Added a troubleshooting guide

1.5.0 - 2022-10-31

- Added .NET MAUI in the cross-platform section

- Formatting improvements

1.4.0 - 2022-09-29

- Added a new chapter on platform requirements

- Added Fasting Blood Glucose risk chapter in interpreting results section

- Added Hemoglobin A1C risk chapter in interpreting results section

- Modified interpretation tables for Hypertension risk, Type 2 diabetes risk, Hypercholesterolemia risk, and Hypertriglyceridemia risk

- Some language edits

1.3.0 - 2022-08-15

- Added information about the acceptable Calculated BMI range

1.2.4 - 2022-06-17

- Added a change log

- Updated best practices chapter with refresh token info

- Added recommendation about user login in best practices chapter

1.2.3 - 2022-06-02

- Updated the extraction pipeline diagram

- Updated sample app screenshots

- Updated iOS SDK version

1.2.2 - 2022-05-12

- Updated package info

- Updated screenshots of sample packages

- Updated the link for the private repo

1.2.1 - 2022-04-07

- Updated the architecture diagram

1.2.0 - 2022-03-31

- Updated the SDK architecture diagram to indicate the extraction library

1.1.2 - 2022-02-09

- Replaced infinity symbol

- Placed quotes around demographics keys

- Removed older MSI file with name conflict

- Updated the colour tables on metabolic risks pages

- Updated Questionnaire page with more info

- Updated acceptable ranges for user height and weight

- Fixed link to MSI page and renamed it

1.1.1 - 2021-08-13

- Some word edits and spell checks

- Added section for cross-platform apps