Introduction

Transdermal Optical Imaging (TOI)

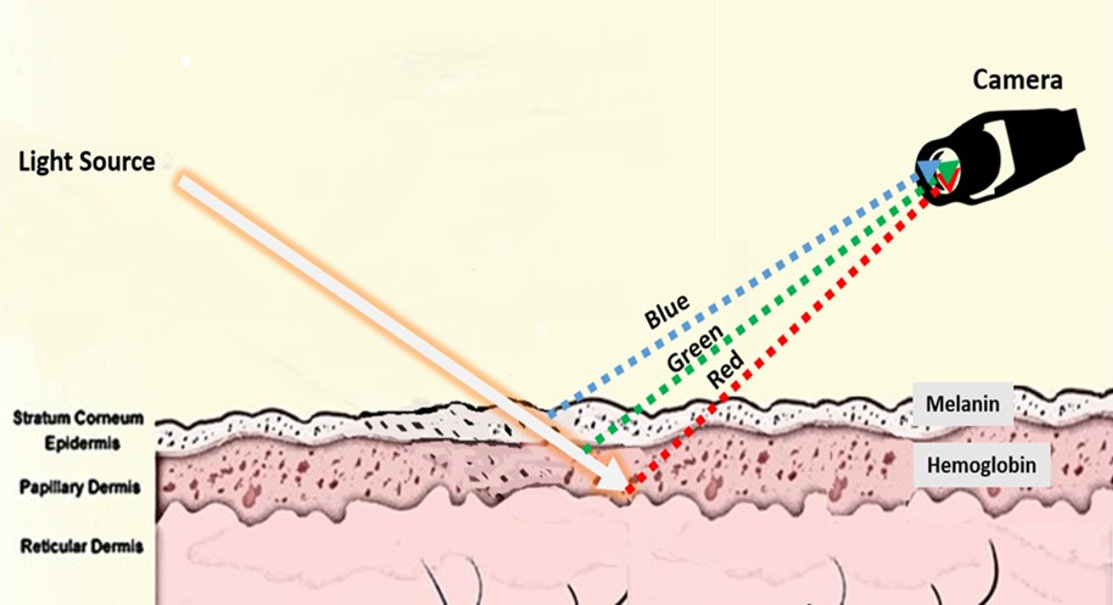

Transdermal Optical Imaging (TOI) is an imaging technology by NuraLogix that uses a digital camera and advanced image processing to extract facial blood-flow data from a human subject.

As a person's heart beats, the color of their skin undergoes minute variations. TOI captures and amplifies these variations and uses them to extract blood-flow information. When the variations are captured from biologically significant regions of interest on the person's face, the result is called facial blood-flow information.
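To make the idea concrete, here is a minimal sketch of how a periodic color variation can reveal a pulse rate. It is a generic illustration, not NuraLogix's TOI algorithm: it assumes you already have one average color value per frame for a skin region, and the function name `estimate_pulse_bpm` and its parameters are hypothetical. It searches the 0.7 to 3.0 Hz band (42 to 180 beats per minute) for the frequency with the most power, using the frame timestamps directly.

```python
import math


def estimate_pulse_bpm(samples, timestamps, lo_hz=0.7, hi_hz=3.0, step_hz=0.01):
    """Return the dominant frequency of a color signal, in beats per minute.

    samples:    one average color value per frame (hypothetical input)
    timestamps: capture time of each frame, in seconds
    """
    # Remove the constant skin tone so only the variation remains.
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]

    best_freq, best_power = lo_hz, -1.0
    n_steps = int(round((hi_hz - lo_hz) / step_hz))
    for i in range(n_steps + 1):
        freq = lo_hz + i * step_hz
        # Correlate the signal with a sine and cosine at this frequency.
        re = sum(c * math.cos(2 * math.pi * freq * t)
                 for c, t in zip(centered, timestamps))
        im = sum(c * math.sin(2 * math.pi * freq * t)
                 for c, t in zip(centered, timestamps))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0


# Synthetic test signal: 10 seconds at 30 frames per second, with a tiny
# 1.2 Hz (72 beats per minute) oscillation on top of a constant value.
fps = 30.0
timestamps = [i / fps for i in range(300)]
samples = [120.0 + 0.5 * math.sin(2 * math.pi * 1.2 * t) for t in timestamps]
bpm = estimate_pulse_bpm(samples, timestamps)
print(f"Estimated pulse rate: {bpm:.1f} bpm")
```

Real footage is far noisier than this synthetic signal (motion, lighting changes, compression), which is why accurate timestamps and well-chosen regions of interest matter.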

There are three prerequisites to extracting useful facial blood-flow information:

- A continuous sequence of images of a person's face (typically produced by a camera or a video file)

- Accurate image timestamps (typically produced by the camera or read from the video file)

- Facial feature landmarks (typically produced by a face tracking library)
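The three prerequisites can be pictured as one record per video frame. The sketch below is illustrative only: `FrameInput` and `find_unusable_frames` are hypothetical names, not part of any DeepAffex API. It shows a simple sanity check that flags frames with missing landmarks or timestamps that do not increase.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FrameInput:
    """One frame's worth of input (hypothetical structure)."""
    pixels: bytes                          # raw image data from a camera or video file
    timestamp_s: float                     # capture time in seconds
    landmarks: List[Tuple[float, float]]   # (x, y) facial feature points


def find_unusable_frames(frames: List[FrameInput]) -> List[int]:
    """Return indices of frames with no landmarks or a non-increasing timestamp."""
    bad = []
    last_ts = None
    for i, frame in enumerate(frames):
        no_face = not frame.landmarks
        bad_time = last_ts is not None and frame.timestamp_s <= last_ts
        if no_face or bad_time:
            bad.append(i)
        else:
            last_ts = frame.timestamp_s
    return bad


face = [(10.0, 20.0), (30.0, 20.0)]
frames = [
    FrameInput(b"\x00", 0.000, face),
    FrameInput(b"\x00", 0.033, face),
    FrameInput(b"\x00", 0.033, face),   # duplicate timestamp
    FrameInput(b"\x00", 0.066, []),     # face tracker found nothing
    FrameInput(b"\x00", 0.100, face),
]
unusable = find_unusable_frames(frames)
print(unusable)
```

A check like this is useful early in development, because timestamp or tracking problems are much easier to diagnose before the data reaches the extraction step.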

DeepAffex

DeepAffex is NuraLogix's overarching solution for extracting and processing facial blood-flow information.

For desktop applications, you can extract facial blood-flow information using the DeepAffex Extraction Library, which implements TOI. You can then send it to the DeepAffex Cloud to estimate several biological signals, such as pulse rate and heart rate variability. NuraLogix has also developed several neural network models that predict biosignals such as blood pressure and health markers such as stress index.

The figure below shows the architecture of a DeepAffex-based desktop application:

In addition to the DeepAffex Extraction Library, you will need a method of producing a sequence of images (a camera or a video file), a face-tracking engine, and networking code that communicates with the DeepAffex Cloud using either HTTP REST or WebSockets.
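As a rough sketch of how these four pieces fit together, the loop below wires an image source, a face tracker, an extraction step, and a networking step into one pipeline. Everything here is hypothetical: `run_measurement`, the callback names, and the fixed-size batching are assumptions made for illustration, and the stand-in functions do no real work. The actual DeepAffex Extraction Library and Cloud APIs are different.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Landmarks = List[Tuple[float, float]]
TrackedFrame = Tuple[float, bytes, Landmarks]   # (timestamp, image, landmarks)


def run_measurement(
    frames: Iterable[Tuple[float, bytes]],
    track_face: Callable[[bytes], Landmarks],
    extract_chunk: Callable[[List[TrackedFrame]], Dict],
    send_chunk: Callable[[Dict], Dict],
    frames_per_chunk: int = 3,
) -> List[Dict]:
    """Feed tracked frames to the extractor in batches and send each result."""
    responses = []
    batch: List[TrackedFrame] = []
    for timestamp, image in frames:
        landmarks = track_face(image)
        if not landmarks:
            continue  # skip frames where no face was found
        batch.append((timestamp, image, landmarks))
        if len(batch) == frames_per_chunk:
            responses.append(send_chunk(extract_chunk(batch)))
            batch = []
    # Frames left in an incomplete final batch are dropped in this sketch.
    return responses


# Stand-ins for the real components (all hypothetical).
def fake_track_face(image: bytes) -> Landmarks:
    return [(1.0, 2.0)] if image else []


def fake_extract_chunk(batch: List[TrackedFrame]) -> Dict:
    return {"start": batch[0][0], "end": batch[-1][0], "count": len(batch)}


def fake_send_chunk(payload: Dict) -> Dict:
    return {"status": "ok", "frames": payload["count"]}


# Seven frames at 30 frames per second; the fourth has no detectable face.
source = [(i / 30.0, b"" if i == 3 else b"\x01") for i in range(7)]
responses = run_measurement(source, fake_track_face, fake_extract_chunk, fake_send_chunk)
print(responses)
```

Passing the components in as callables keeps the loop independent of any particular camera, face tracker, or transport, so each piece can be swapped or tested separately.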

To develop iOS or Android apps, you should use the higher-level Anura Core SDK. On the web, you should use the Web Measurement Service.

The next chapter walks through a simple Python example that extracts facial blood-flow information, sends it to the DeepAffex Cloud for processing, and displays the results.