Adapting Face Trackers

The DFX Extraction Library does not provide any built-in facial landmark tracking. Face detection and facial landmark tracking are widely available as commodities, with implementations such as Dlib, Visage, etc.

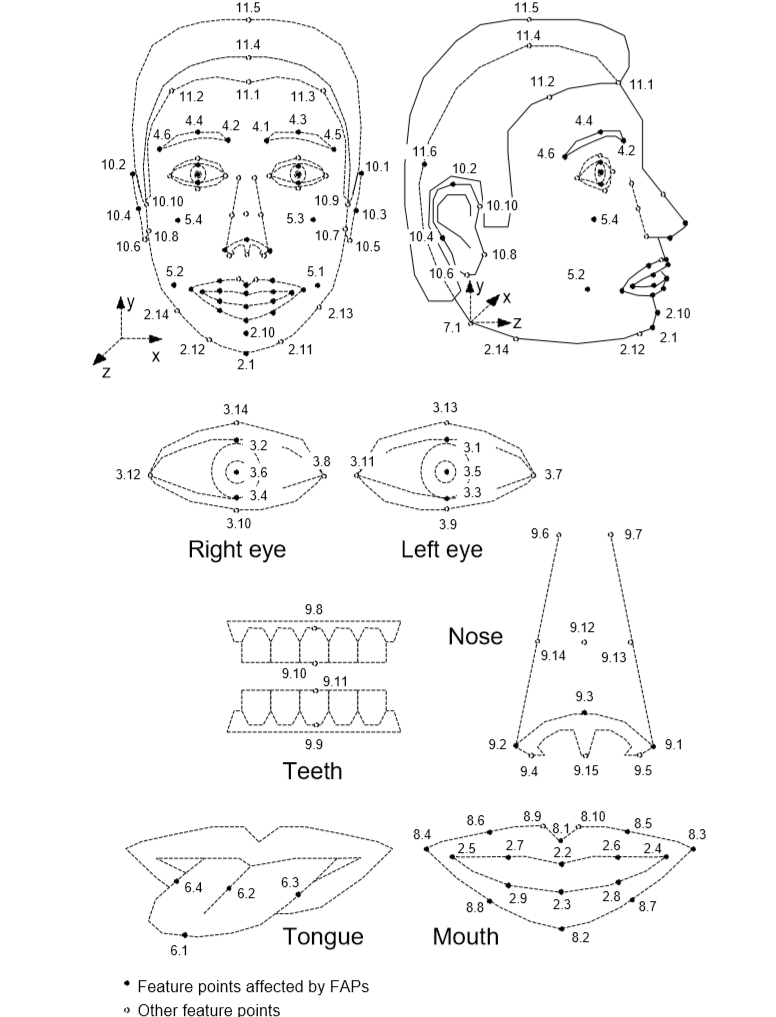

MPEG-4 Facial Data Points

Whatever the output format of the face-tracking engine, it must be mapped to standard MPEG-4 Facial Data Points before being inserted into a DFX Frame as a DFX Face structure.

Please see the _dlib2mpeg4 structure in dlib_tracker.py in the dfxdemo for an example of such a mapping for the Dlib face tracker. NuraLogix will be happy to assist you in this process; we already have similar mappings for several common face-tracking engines.
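At its core, a tracker-to-MPEG-4 mapping is a lookup table from the tracker's landmark indices to MPEG-4 point names. The sketch below assumes the tracker returns a list of (x, y) tuples indexed by landmark number. TRACKER_TO_MPEG4 and map_to_mpeg4 are hypothetical names, and the index values are placeholders for illustration only; they are not the correspondence used by _dlib2mpeg4 in dlib_tracker.py.

```python
from typing import Dict, List, Tuple

# HYPOTHETICAL mapping from a tracker's landmark indices to MPEG-4 Facial
# Data Point names. The indices are placeholders; they are NOT the real
# Dlib correspondence used by _dlib2mpeg4 in dlib_tracker.py.
TRACKER_TO_MPEG4: Dict[int, str] = {
    0: "3.7",
    1: "3.11",
    2: "3.12",
    3: "3.8",
    4: "9.3",
}


def map_to_mpeg4(landmarks: List[Tuple[float, float]]) -> Dict[str, Tuple[float, float]]:
    """Rename tracker landmarks (indexed by tracker point ID) to MPEG-4 names.

    Tracker points without an MPEG-4 equivalent are dropped, and mapped
    indices that the tracker did not return are skipped.
    """
    mapped: Dict[str, Tuple[float, float]] = {}
    for index, name in TRACKER_TO_MPEG4.items():
        if index < len(landmarks):
            mapped[name] = landmarks[index]
    return mapped
```

Keeping the mapping as data rather than code means supporting a different tracker only requires a different table.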

Required Landmarks

The DFX Collector has a method called getRequiredPosePointIDs, which returns a list of the MPEG-4 Facial Data Point names that must be added to the Face structure for blood-flow extraction to work correctly. This list varies based on the study configuration data used to initialize the DFX Factory.
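The list returned by getRequiredPosePointIDs can be used to check a mapped face before it is added to a frame. The helper below is hypothetical and is not part of the DFX library. It assumes the mapped points are held in a plain dict keyed by MPEG-4 name, and it takes the required list as an argument rather than calling the Collector, since the exact binding is not shown here.

```python
from typing import Dict, Iterable, List, Tuple


def missing_required_points(
    required_ids: Iterable[str],
    pose_points: Dict[str, Tuple[float, float]],
) -> List[str]:
    """Return the required MPEG-4 point names that are absent from pose_points.

    required_ids is assumed to be the list obtained from the Collector's
    getRequiredPosePointIDs; pose_points maps MPEG-4 names to (x, y).
    """
    return [name for name in required_ids if name not in pose_points]
```

For example, with a required list of ["3.1", "3.2", "9.3"] and a mapped face containing only "3.1" and "9.3", the helper reports ["3.2"], which tells you the tracker mapping is incomplete for that study.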

However, there are currently 15 core facial landmarks used by the majority of DFX Studies. These landmarks and other details are discussed thoroughly in the Required Facial Landmarks section.

DFX Face

A DFX Face structure consists of:

- id: unique to one person in a frame. The person's name, a GUID, or even a counter will suffice.
- faceRect: the bounding rectangle of the face in the frame
- poseValid: true if the posePoints are valid
- detected: true if the face was detected in this frame; false if the face-tracking information is cached from an older frame and was used to estimate the landmark points in this frame
- posePoints: a map of MPEG-4 point names to DFX PosePoints
- attributes: a map of additional face attributes such as yaw, pitch, etc.

A DFX PosePoint structure consists of:

- x: the X location in the frame
- y: the Y location in the frame
- z: the Z location in the frame (reserved for future use)
- valid: true if this point was valid in this frame
- estimated: false if this point was returned by the face tracker; true if it was estimated by interpolating from points that were returned by the face tracker
- quality: the tracking quality (or probability) of this point, between 0 and 1
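To make the two structures concrete, they can be modelled as plain Python dataclasses. This is an illustrative sketch, not the DFX library's own classes: the field names mirror the lists above, but the types, the defaults, and the (x, y, width, height) format for faceRect are assumptions. The hypothetical interpolate helper shows how a point derived from tracked points would be flagged as estimated; taking the lower of the two qualities is an assumed policy, not a DFX rule.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class PosePoint:
    """Illustrative model of a DFX PosePoint (not the library's own class)."""
    x: float
    y: float
    z: float = 0.0           # reserved for future use
    valid: bool = True
    estimated: bool = False  # True if interpolated rather than returned by the tracker
    quality: float = 1.0     # tracking quality (or probability), 0..1

    def __post_init__(self) -> None:
        if not 0.0 <= self.quality <= 1.0:
            raise ValueError("quality must be between 0 and 1")


@dataclass
class Face:
    """Illustrative model of a DFX Face (not the library's own class)."""
    id: str                              # name, GUID, or counter
    faceRect: Tuple[int, int, int, int]  # ASSUMED (x, y, width, height)
    poseValid: bool
    detected: bool                       # False if based on cached tracking data
    posePoints: Dict[str, PosePoint] = field(default_factory=dict)
    attributes: Dict[str, float] = field(default_factory=dict)  # e.g. yaw, pitch


def interpolate(a: PosePoint, b: PosePoint, t: float = 0.5) -> PosePoint:
    """Linearly interpolate between two tracked points and mark the result estimated."""
    return PosePoint(
        x=a.x + t * (b.x - a.x),
        y=a.y + t * (b.y - a.y),
        valid=a.valid and b.valid,
        estimated=True,
        quality=min(a.quality, b.quality),  # assumed policy
    )
```

A freshly tracked face would then be built as Face(id="person-0", faceRect=(100, 80, 200, 220), poseValid=True, detected=True, posePoints={"9.3": PosePoint(x=200.0, y=190.0, quality=0.9)}, attributes={"yaw": 5.0}).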

Face-tracking strategies

If the face tracker you have selected cannot keep pace with the frame rate of the image source, you can run it on a best-effort basis in a background task and use its most recently produced results (within reason). Please be sure to set the detected and estimated fields correctly when using cached data.
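One way to implement this best-effort strategy is a worker thread that tracks whichever frame it was last handed, while the capture loop reuses the newest result. The sketch below is hypothetical: BackgroundTracker, its methods, and the max_age cut-off (one interpretation of "within reason") are illustrative names, and the track callable stands in for your real face tracker returning MPEG-4 points, or None when no face is found.

```python
import threading
from typing import Callable, Dict, Optional, Tuple

Points = Dict[str, Tuple[float, float]]


class BackgroundTracker:
    """Run a slow face tracker on a worker thread and serve its latest result."""

    def __init__(self, track: Callable[[object], Optional[Points]]) -> None:
        self._track = track
        self._lock = threading.Lock()
        self._latest: Optional[Points] = None
        self._latest_seq = -1  # frame number the latest result was computed on
        self._busy = False

    def submit(self, frame: object, seq: int) -> None:
        """Start tracking `frame` unless the worker is still busy (best effort)."""
        with self._lock:
            if self._busy:
                return  # skip this frame; the capture loop never waits
            self._busy = True
        threading.Thread(target=self._run, args=(frame, seq), daemon=True).start()

    def _run(self, frame: object, seq: int) -> None:
        points: Optional[Points] = None
        try:
            points = self._track(frame)
        finally:
            with self._lock:
                if points is not None:
                    self._latest, self._latest_seq = dict(points), seq
                self._busy = False

    def latest(self, seq: int, max_age: int) -> Optional[Tuple[Points, bool]]:
        """Return (points, detected) for frame `seq`, or None if too stale.

        detected is True only when the result was computed on this very
        frame; otherwise the points are cached from an older frame.
        """
        with self._lock:
            if self._latest is None or seq - self._latest_seq > max_age:
                return None
            return dict(self._latest), self._latest_seq == seq
```

In the capture loop you would call submit(frame, seq) and then latest(seq, max_age) for every frame. When detected comes back False, set the Face's detected field to false, and (as an assumption about intended usage) mark the reused PosePoints as estimated.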

Currently, the DFX solution tolerates some inaccuracy in PosePoint locations better than it tolerates dropped frames. (This may change in the future, in which case a different face-tracking strategy may be more appropriate.)

Required Facial Landmarks

Each DeepAffex Study potentially requires a unique set of facial landmarks. However, there are currently 15 core facial landmarks used by the majority of studies. These points give the DFX Collector the information it needs to identify the regions of interest for blood-flow extraction.

In addition, there are 44 visual facial landmarks, which are used for animation and rendering and do not affect measurement quality.

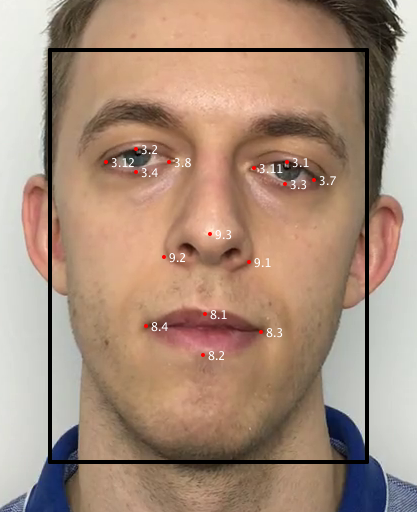

Core Measurement Points

The 15 core facial data points are identified in this image by red dots at their locations, each with a corresponding white label. The black box is the detected face rectangle, which is provided to the Extraction Library along with the face points.

In addition to the face bounding box (the black box), the following 15 points must be provided:

3.1, 3.2, 3.3, 3.4, 3.7, 3.8, 3.11, 3.12, 8.1, 8.2, 8.3, 8.4, 9.1, 9.2, 9.3

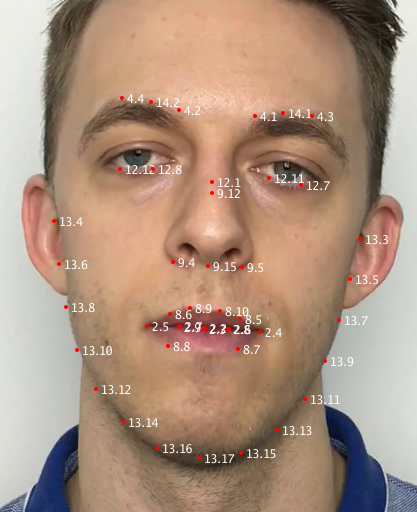

Visual Animation Points

There are 44 animation points, which are used to construct the visuals that end users typically see along the contour of the face. These points do not need to be tracked (or mapped) as accurately as the core measurement points, as they serve only aesthetic purposes. Most face engines have difficulty accurately tracking the outer profile of a face, so these points are expected to be considerably less accurate in general. In the diagram, you can see how the group 13 points have drifted from the edge of the face.

The 44 points used for visual animation include:

2.2, 2.3, 2.4, 2.5, 2.6, 2.7, 2.8, 2.9, 4.1, 4.2, 4.3, 4.4, 8.10, 8.5, 8.6, 8.7, 8.8, 8.9, 9.12, 9.15, 9.4, 9.5, 12.1, 12.11, 12.12, 12.7, 12.8, 13.10, 13.11, 13.12, 13.13, 13.14, 13.15, 13.16, 13.17, 13.3, 13.4, 13.5, 13.6, 13.7, 13.8, 13.9, 14.1, 14.2
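When building or auditing a mapping, it can help to keep these two lists in code so that mapping effort can be prioritized toward the core points. The constants below are copied from the lists above; classify is a hypothetical helper. For any given study, the list returned by getRequiredPosePointIDs remains the authoritative set of required points.

```python
# Core measurement points (affect blood-flow extraction), copied from the list above.
CORE_POINTS = frozenset({
    "3.1", "3.2", "3.3", "3.4", "3.7", "3.8", "3.11", "3.12",
    "8.1", "8.2", "8.3", "8.4", "9.1", "9.2", "9.3",
})

# Visual animation points (aesthetic only), copied from the list above.
VISUAL_POINTS = frozenset({
    "2.2", "2.3", "2.4", "2.5", "2.6", "2.7", "2.8", "2.9",
    "4.1", "4.2", "4.3", "4.4",
    "8.10", "8.5", "8.6", "8.7", "8.8", "8.9",
    "9.12", "9.15", "9.4", "9.5",
    "12.1", "12.11", "12.12", "12.7", "12.8",
    "13.10", "13.11", "13.12", "13.13", "13.14", "13.15", "13.16", "13.17",
    "13.3", "13.4", "13.5", "13.6", "13.7", "13.8", "13.9",
    "14.1", "14.2",
})


def classify(name: str) -> str:
    """Return 'core', 'visual', or 'other' for an MPEG-4 point name."""
    if name in CORE_POINTS:
        return "core"
    if name in VISUAL_POINTS:
        return "visual"
    return "other"
```

Because the two sets are disjoint, a tracker point can be routed to exactly one category, and points classified as "other" can simply be left out of the Face structure unless a study requires them.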

Raw Data

To help you map a face tracker to the MPEG-4 points that the library uses, the raw data for the annotated image is provided as a CSV file.

The first line in the CSV file is a header describing the data.

The next two lines are for the top-left corner (box.tl) and the bottom-right corner (box.br) of the bounding box, respectively.

The remaining data lines are for the 174 landmark points provided by the tracker we used to produce this data.
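The file layout described above (a header, then box.tl, then box.br, then the landmarks) can be split apart with the standard csv module. This is a sketch under a loud assumption: the column layout is not documented here, so parse_raw_landmarks (a hypothetical name) assumes each data row has the form label,x,y. Check the header of the actual file and adjust the column indices if it differs.

```python
import csv
import io
from typing import List, Tuple

Point = Tuple[float, float]


def parse_raw_landmarks(text: str) -> Tuple[Point, Point, List[Tuple[str, Point]]]:
    """Split the raw CSV into (box_tl, box_br, landmarks).

    ASSUMPTION: every data row has the form label,x,y.
    """
    rows = list(csv.reader(io.StringIO(text)))
    data = [row for row in rows[1:] if row]  # skip the header and blank lines
    if len(data) < 2:
        raise ValueError("expected box.tl and box.br rows after the header")

    def point(row: List[str]) -> Point:
        return float(row[1]), float(row[2])

    box_tl, box_br = point(data[0]), point(data[1])
    landmarks = [(row[0], point(row)) for row in data[2:]]
    return box_tl, box_br, landmarks
```

Applied to the real file, len(landmarks) should come out as 174; the labelled tracker points can then be compared against the annotated image to work out which tracker index corresponds to which MPEG-4 point.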